Yes, it does work without the pipeline and doesn't error.

OK GOOD! (well, and still bad)

Did you set the pipeline to write the fields into another field instead of the root, like:

```
"target_field" : "body_details"
```

Earlier today, I did remove the

```
"strict_json_parsing": false,
"add_to_root" : true
```

and replaced them with target_field.

The behaviour I observed was that there wasn't any error; however, I wasn't able to view the logs in the expected data view. I only see the logs in the expected data view when I include add_to_root.

Also, I think I need to use add_to_root, as I need the output of the parsed body to be added as separate fields.

That is highly unusual...

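What add_to_root does is take the fields from the Body JSON and put them at the root level of the document... that is often a cause of issues, because any key in the Body that clashes with an existing root field, or with how that field is mapped in the data stream, will overwrite it or make the document fail to index. It is almost always MUCH safer NOT to use add_to_root...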

From the json processor docs: add_to_root: Flag that forces the parsed JSON to be added at the top level of the document. target_field must not be set when this option is chosen.

When you set target_field, it puts the separate fields UNDER the target_field. For example, if it is set to body_details:

So instead of

```
{
  "@t" : "foo"
}
```

it will look like

```
{
  "body_details" : {
    "@t" : "foo"
  }
}
```
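A quick way to see what add_to_root does with a clashing key is the simulate API. The document below is hypothetical (the `message` and `Body` values are made up for illustration): the parsed Body contains a `message` key, and with add_to_root it replaces the existing root-level `message` value. Note that `_simulate` only runs the processors and does not index anything, so mapping conflicts (e.g. a Body key whose type doesn't match the data stream's mapping for that field) will not show up here, only when the document is actually indexed.

```
POST _ingest/pipeline/_simulate
{
  "pipeline": {
    "processors": [
      {
        "json": {
          "field": "Body",
          "add_to_root": true
        }
      }
    ]
  },
  "docs": [
    {
      "_source": {
        "message": "original root value",
        "Body": """{"@t" : "foo", "message" : "value from Body"}"""
      }
    }
  ]
}
```

In the result, `message` should read `value from Body`; the original root value is gone, with no error to tell you it happened.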

Can you try this pipeline? Please leave the default index... try this:

```
{
  "OTEL-JSON-PROCESSOR": {
    "description": "For OTEL Web Cluster",
    "processors": [
      {
        "json": {
          "field": "Body",
          "target_field": "body_details",
          "strict_json_parsing": false,
          "ignore_failure": true
        }
      }
    ]
  }
}
```

I have another idea, but please try this first...

I think it may actually be a different issue... but I want to verify that the above does not work.

Try this, then let me know and I will have you try another test.

Next Test


Also, can you test with just this (take out the JSON processor)? We want to see that using the pipeline directive works with data streams... I think this may be the issue.

So run this pipeline, see if the data is ingested, and then check whether you see the field below added.

```
{
  "OTEL-JSON-PROCESSOR": {
    "description": "For OTEL Web Cluster",
    "processors": [
      {
        "set": {
          "description": "check if pipeline ran",
          "field": "pipeline_name",
          "value": "custom_pipeline"
        }
      }
    ]
  }
}
```
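To check whether the marker field was actually added, an `exists` query against the data stream is one option. This sketch assumes the default data stream `logs-generic-default` (the index named in the exporter error further down this thread); swap in whatever you are actually writing to.

```
GET logs-generic-default/_search
{
  "size": 1,
  "query": {
    "exists": {
      "field": "pipeline_name"
    }
  }
}
```

If this returns a hit containing `"pipeline_name": "custom_pipeline"`, the pipeline ran for that document.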

Let me know... I think adding the pipeline could be the issue, and there is another way to do it if in fact it is.

Tested: logs keep getting ingested and there are no errors in the OTEL pods; however, I can't see the logs in the expected data view. I only found the logs here: GET web-otel/_search, and I could see that the data stream was last updated January 31st, 2025 6:46:35 PM.

Tested what? I gave you 2 tests.

What Data View?

Sorry, I thought we agreed to leave it in the default data stream, so that is clearly not what I asked...

I don't think I can help any more unless you do the tests I ask and provide full responses in a methodical fashion. We have spent a lot of time... but it seems that you have your own testing methodology and are not willing to take the time to respond and be methodical... I am sure we can fix this, but I can't help with short, non-specific responses... I am in the dark, and I have spent considerable time.

It seems you are pretty skilled at this... I am sure you will figure it out...

Sincere apologies, and thanks for the time and effort put into this. I actually missed the message with the first test and only saw the message with the second test. I will retry both tests and get back to you.

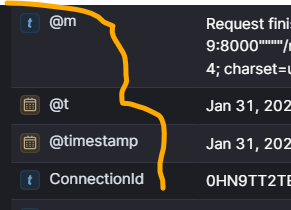

I have tried this and there was no error in the logs, and yes, it did look like you described. Here is a screenshot to confirm.

So is it working?

Does that meet your needs?

What data stream did you write to?

It is working, with the default data stream. Unfortunately, it doesn't fully meet my needs: is there a way I can have just the field without body_details attached to it? That was the main reason I was using add_to_root in the first place. If there is a way to achieve that, it would be very helpful to me.

Thanks to your help, I have been able to deduce that the error appears only when "add_to_root" is in the pipeline.

So what is happening is that when you set add_to_root, some field you are trying to add to the root is causing the issue... I do not know which one it is.

As I mentioned above, add_to_root is a common source of issues... It is unclear to me why it is so important to have the fields at the root.

If you are set on it...

To debug, leave the json processor writing to body_details.

Then you can start moving/copying the fields one by one to the root of the document until you figure out which one is causing the issue.

You can use the set or rename processor.

Then, when you figure out which field is the bad one, you will need to decide what to do.
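A minimal sketch of the "copy" variant, assuming a version where the set processor supports `copy_from` (7.11+) and using `@m` purely as an example field name with a made-up Body: the original stays under body_details and a copy lands at the root, so you can add one such processor at a time and watch for the first one that fails.

```
POST _ingest/pipeline/_simulate
{
  "pipeline": {
    "processors": [
      {
        "json": {
          "field": "Body",
          "target_field": "body_details"
        }
      },
      {
        "set": {
          "description": "copy one field to the root while debugging",
          "field": "@m",
          "copy_from": "body_details.@m"
        }
      }
    ]
  },
  "docs": [
    {
      "_source": {
        "Body": """{"@t" : "foo", "@m" : "hello"}"""
      }
    }
  ]
}
```

Copying rather than renaming keeps body_details complete while you narrow things down; once you know which fields are safe, rename is the cleaner end state.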

I will take a quick look... Provide me the full JSON of a document that works with the target field body_details but doesn't work with add_to_root... Maybe I will be able to spot it.

Thanks for the detailed explanation. I'll provide the log that works with body_details below. However, to be completely honest, I don't know which ones don't work. This is because with add_to_root we still see logs in Kibana; our fear is that some logs might be dropped as a result of the error message we get in the OTEL pods, if you get what I mean.

Log that works without errors in the pods via body_details:

```
{
  "Attributes.time": [
    "2025-01-31T21:08:03.52234587Z",
    ""
  ]
}
```

Yes, this is what makes it very hard... The document you just gave me seemed to work: I reran it with add_to_root set and it worked. But I suspect SOME of the messages have a field that is in conflict, and that can be very, very hard to find... Hence why we do not recommend add_to_root...

You are going to need to find some messages that fail and address them...

What makes it worse... maybe you get other messages that are different, and they fail for a different reason / on a different field.

I am not sure why you want the fields at the root... keeping them under a target field does not change any analysis and is much safer / less error-prone. We strongly recommend against add_to_root unless you have very, very structured and well-known logs.

Thanks once again. So basically I want the fields in the body extracted without any prefix, just like in the screenshot below. If I can do that without add_to_root, I will surely not use add_to_root. Any suggestions on how to go about that? The only method I think I can use is the json processor plus the set processor, maybe? Any suggestions will be highly appreciated. Thanks once again for all the help.

Yes, you will need to do what you said... it will be a bit tedious; there is no simple solution.

(again, this is why we do not recommend it unless you have very "well-behaved/understood" logs)

See the code below.

I would expand the Body with the json processor into another field, then use rename and work your way through the fields until you find one that breaks...

Example

```
POST _ingest/pipeline/_simulate
{
  "pipeline": {
    "processors": [
      {
        "json": {
          "field": "Body",
          "target_field": "body_details"
        }
      },
      {
        "rename": {
          "field": "body_details.foo",
          "target_field": "foo"
        }
      }
    ]
  },
  "docs": [
    {
      "_source": {
        "Body": """{"foo" : "bar", "other_field" : "other_value"}"""
      }
    }
  ]
}

# Result
{
  "docs": [
    {
      "doc": {
        "_index": "_index",
        "_version": "-3",
        "_id": "_id",
        "_source": {
          "body_details": {
            "other_field": "other_value"
          },
          "Body": """{"foo" : "bar", "other_field" : "other_value"}""",
          "foo": "bar"
        },
        "_ingest": {
          "timestamp": "2025-02-01T23:08:34.756019672Z"
        }
      }
    }
  ]
}
```

BTW, if you set neither target_field NOR add_to_root, it will replace the field itself with the parsed object... perhaps that will work for you.

```
POST _ingest/pipeline/_simulate
{
  "pipeline": {
    "processors": [
      {
        "json": {
          "field": "Body"
        }
      }
    ]
  },
  "docs": [
    {
      "_source": {
        "Body": """{"foo" : "bar", "other_field" : "other_value"}"""
      }
    }
  ]
}

# Result
{
  "docs": [
    {
      "doc": {
        "_index": "_index",
        "_version": "-3",
        "_id": "_id",
        "_source": {
          "Body": {
            "other_field": "other_value",
            "foo": "bar"
          }
        },
        "_ingest": {
          "timestamp": "2025-02-01T23:08:05.228062445Z"
        }
      }
    }
  ]
}
```

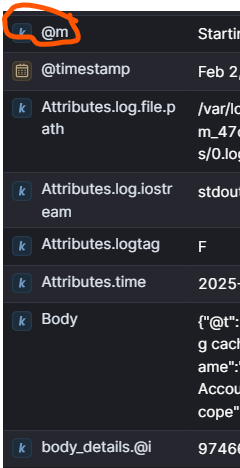

Using the first ingest pipeline with the rename option as suggested, I was able to achieve what I wanted: I renamed body_details.@m to @m as a test. Please find the screenshot attached.

I also tried it for body_details.@RequestId; however, I got an error in the OTEL pod logs. It is the same error type for both fields, and both fields keep appearing as expected in Kibana, which makes me wonder what's actually happening behind the scenes.

```
2025-02-02T11:24:33.551Z error elasticsearchexporter@v0.115.0/bulkindexer.go:346 failed to index document {"kind": "exporter", "data_type": "logs", "name": "elasticsearch/accounts", "index": "logs-generic-default", "error.type": "illegal_argument_exception", "error.reason": "field [body_details.@m] doesn't exist"}
```

I have set ignore_failure to true, and I seem to have stopped seeing the error in the pods. I'm hoping this won't result in any missing or dropped logs; if it doesn't, that should suffice for me, although I'm still curious about the above error message.

Here is the pipeline:

```
[
  {
    "json": {
      "field": "Body",
      "target_field": "body_details",
      "strict_json_parsing": false,
      "ignore_failure": true
    }
  },
  {
    "rename": {
      "field": "body_details.@m",
      "target_field": "@m",
      "ignore_failure": true
    }
  }
]
```

Thanks--

So I tried the first option and that worked as expected; I was able to rename the field body_details.@m to @m. Please find a screenshot attached to confirm.

However, there is an issue: I got this error message in the OTEL pods:

```
2025-02-02T11:24:33.551Z error elasticsearchexporter@v0.115.0/bulkindexer.go:346 failed to index document {"kind": "exporter", "data_type": "logs", "name": "elasticsearch/accounts", "index": "logs-generic-default", "error.type": "illegal_argument_exception", "error.reason": "field [body_details.@m] doesn't exist"}
```

I was able to get around this error by setting "ignore_failure" to true in the rename processor. I am a bit concerned about whether this error leads to dropped/missing logs or a failure to ingest logs. If it doesn't, I am good to use this method; like I said, there are no more errors in the logs at the moment, I just need some clarity on the above error. Thanks once again.

I suggest you take a careful look at the processors' documentation; there are other options there that will help with this. For example, you probably need to set ignore_missing to true for every rename.

That way, if the field doesn't exist in a given document, the rename is skipped instead of throwing an error.
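Putting that together, here is a sketch of the pipeline with `ignore_missing` on each rename instead of `ignore_failure`, run through `_simulate` with two hypothetical documents (the Body values are made up): one whose Body has `@m` and `@RequestId`, and one whose Body has neither. The `field [...] doesn't exist` error comes from a rename processor hitting a document that lacks that field; without `ignore_missing` (or `ignore_failure`), that failure rejects the whole document, which is what the exporter's `failed to index document` line reports. That would also explain why the fields still appear in Kibana: the documents that do contain them index fine.

```
POST _ingest/pipeline/_simulate
{
  "pipeline": {
    "processors": [
      {
        "json": {
          "field": "Body",
          "target_field": "body_details",
          "strict_json_parsing": false,
          "ignore_failure": true
        }
      },
      {
        "rename": {
          "field": "body_details.@m",
          "target_field": "@m",
          "ignore_missing": true
        }
      },
      {
        "rename": {
          "field": "body_details.@RequestId",
          "target_field": "@RequestId",
          "ignore_missing": true
        }
      }
    ]
  },
  "docs": [
    {
      "_source": {
        "Body": """{"@m" : "hello", "@RequestId" : "abc-123"}"""
      }
    },
    {
      "_source": {
        "Body": """{"other_field" : "other_value"}"""
      }
    }
  ]
}
```

The difference from `ignore_failure` is scope: `ignore_missing` only skips the "field doesn't exist" case, whereas `ignore_failure` would also silently swallow any other error that processor raises.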

Thanks, Stephen. All sorted!