Elastic Stack 7.3

I bulk-imported some data from Shodan in JSON form. This is one of the hosts:

    {
      "host": {
        "data": [
          {
            "banner": "421 4.3.2 Service not available\r\n",
            "port": 25,
            "product": "Microsoft Exchange 2010 smtpd",
            "shodan_module": "smtp"
          }
        ],
        "hostname": "smtp21",
        "ip": "ip address",
        "org": "organization",
        "os": "None given",
        "updated": "2019-08-07T11:07:00.616447",
        "vulns": [
          "CVE-2018-8581"
        ]
      }
    }

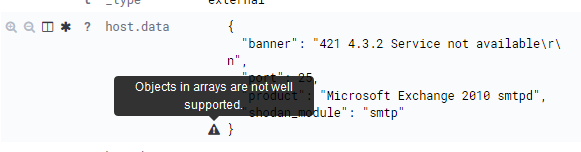

But I get this in Kibana...

It seemed to go into ES OK, and it created the index with the right fields.

I know this used to be a Kibana problem, and I found this plugin -

but it only goes up to 6.4.2

and I guess this is related to it -

but it seems to be around a year old.

This is the Python bulk-import code I used, which does seem to get the data in there:

    import json
    import uuid

    from elasticsearch import Elasticsearch, helpers

    user = 'username'
    password = 'password'

    # ES = "http://ipaddress:9200"
    es = Elasticsearch([{'host': 'ipaddress', 'port': 9200}], http_auth=(user, password))

    data = r'/mnt/c/Users/money/Documents/Python/modules/data.json'

    # Load the whole Shodan export into memory
    with open(data, "r") as json_file:
        nodes = json.load(json_file)

    # Build one bulk action per host in the export
    actions = [
        {
            "_index": "shodan",
            "_type": "external",  # mapping types are deprecated in 7.x
            "_id": str(uuid.uuid4()),  # random document ID, passed as a string
            "_source": node
        }
        for node in nodes['hosts']
    ]

    try:
        response = helpers.bulk(es, actions, index="shodan", doc_type='_doc')
        print("\nRESPONSE:", response)
    except Exception as e:
        print("\nERROR:", e)

Is there no way to fix what, I guess, amounts to an array within an array?
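
One workaround I can think of is to flatten each host before indexing, so that every entry in `data` becomes its own document carrying a copy of the host-level fields. This is only a minimal sketch, assuming each record looks like the sample above (a top-level `host` object with a `data` array); `flatten_host` is a hypothetical helper name, not part of any library, and I have not checked how the result looks in Kibana.

```python
import copy


def flatten_host(doc):
    """Yield one flat dict per entry in doc["host"]["data"].

    Assumes the record shape from the sample above; hypothetical helper.
    """
    host = doc["host"]
    # Host-level fields are everything except the nested "data" array.
    shared = {key: value for key, value in host.items() if key != "data"}
    for service in host.get("data", []):
        flat = copy.deepcopy(shared)  # avoid sharing lists such as "vulns"
        flat.update(service)          # add banner, port, product, ...
        yield flat


sample = {
    "host": {
        "data": [
            {
                "banner": "421 4.3.2 Service not available\r\n",
                "port": 25,
                "product": "Microsoft Exchange 2010 smtpd",
                "shodan_module": "smtp",
            }
        ],
        "hostname": "smtp21",
        "ip": "ip address",
        "org": "organization",
        "os": "None given",
        "updated": "2019-08-07T11:07:00.616447",
        "vulns": ["CVE-2018-8581"],
    }
}

flat_docs = list(flatten_host(sample))
print(flat_docs)
```

Each flattened dict could then be used as the `_source` of a bulk action in place of the original record. The trade-off is that the host-level fields are repeated once per service.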

Sorry for such a long topic, I can't believe no one has come up with a good solution yet.

Is the easiest solution just to use Filebeat?