We take snapshots on an hourly basis. Each one takes a few seconds to complete, sometimes a bit more. The index size is around 1 GB.

Here are the logs from all nodes around the time of the network spike incident:

2022-12-16T17:19:28.85976671Z stdout F {"type": "server", "timestamp": "2022-12-16T17:19:28,859Z", "level": "INFO", "component": "o.e.m.j.JvmGcMonitorService", "cluster.name": "es", "node.name": "es-data-azb-spot-v1-2", "message": "[gc][262464] overhead, spent [278ms] collecting in the last [1s]", "cluster.uuid": "ro-B1E7ZRcWafSbSXik7Ew", "node.id": "vf1m25zjS3yK7edG4pmANQ" }

2022-12-16T17:19:28.344216433Z stdout F {"type": "index_search_slowlog", "timestamp": "2022-12-16T17:19:28,343Z", "level": "INFO", "component": "i.s.s.query", "cluster.name": "es", "node.name": "es-data-azb-spot-v1-2", "message": "[search.index.v4][0]", "took": "1s", "took_millis": "1001", "total_hits": "0+ hits", "types": "[]", "stats": "[]", "search_type": "QUERY_THEN_FETCH", "total_shards": "1", "source": "{\"from\":396025,\"size\":35,\"timeout\":\"10s\",\"query\":{\"match_all\":{\"boost\":1.0}},\"sort\":[{\"publishedAt\":{\"order\":\"desc\"}}],\"track_total_hits\":-1}", "cluster.uuid": "ro-B1E7ZRcWafSbSXik7Ew", "node.id": "vf1m25zjS3yK7edG4pmANQ" }

2022-12-16T17:19:25.18829492Z stdout F {"type": "index_search_slowlog", "timestamp": "2022-12-16T17:19:25,188Z", "level": "INFO", "component": "i.s.s.query", "cluster.name": "es", "node.name": "es-data-azb-ondemand-v1-0", "message": "[search.index.v4][0]", "took": "1.1s", "took_millis": "1106", "total_hits": "0+ hits", "types": "[]", "stats": "[]", "search_type": "QUERY_THEN_FETCH", "total_shards": "1", "source": "{\"from\":402115,\"size\":35,\"timeout\":\"10s\",\"query\":{\"match_all\":{\"boost\":1.0}},\"sort\":[{\"publishedAt\":{\"order\":\"desc\"}}],\"track_total_hits\":-1}", "cluster.uuid": "ro-B1E7ZRcWafSbSXik7Ew", "node.id": "jHR-y1SaTB6_EE9mDBMTHQ" }

2022-12-16T17:19:24.398582927Z stdout F {"type": "index_search_slowlog", "timestamp": "2022-12-16T17:19:24,398Z", "level": "INFO", "component": "i.s.s.query", "cluster.name": "es", "node.name": "es-data-azb-spot-v1-2", "message": "[search.index.v4][0]", "took": "1s", "took_millis": "1046", "total_hits": "0+ hits", "types": "[]", "stats": "[]", "search_type": "QUERY_THEN_FETCH", "total_shards": "1", "source": "{\"from\":405860,\"size\":35,\"timeout\":\"10s\",\"query\":{\"match_all\":{\"boost\":1.0}},\"sort\":[{\"publishedAt\":{\"order\":\"desc\"}}],\"track_total_hits\":-1}", "cluster.uuid": "ro-B1E7ZRcWafSbSXik7Ew", "node.id": "vf1m25zjS3yK7edG4pmANQ" }

2022-12-16T17:19:24.23656946Z stdout F {"type": "server", "timestamp": "2022-12-16T17:19:24,236Z", "level": "INFO", "component": "o.e.m.j.JvmGcMonitorService", "cluster.name": "es", "node.name": "es-data-azb-ondemand-v1-0", "message": "[gc][880123] overhead, spent [320ms] collecting in the last [1s]", "cluster.uuid": "ro-B1E7ZRcWafSbSXik7Ew", "node.id": "jHR-y1SaTB6_EE9mDBMTHQ" }

2022-12-16T17:19:23.858555884Z stdout F {"type": "server", "timestamp": "2022-12-16T17:19:23,858Z", "level": "INFO", "component": "o.e.m.j.JvmGcMonitorService", "cluster.name": "es", "node.name": "es-data-azb-spot-v1-2", "message": "[gc][262459] overhead, spent [294ms] collecting in the last [1s]", "cluster.uuid": "ro-B1E7ZRcWafSbSXik7Ew", "node.id": "vf1m25zjS3yK7edG4pmANQ" }

2022-12-16T17:16:23.842077266Z stdout F {"type": "index_search_slowlog", "timestamp": "2022-12-16T17:16:23,841Z", "level": "INFO", "component": "i.s.s.query", "cluster.name": "es", "node.name": "es-data-azb-spot-v1-0", "message": "[search.index.v4][0]", "took": "1s", "took_millis": "1029", "total_hits": "0+ hits", "types": "[]", "stats": "[]", "search_type": "QUERY_THEN_FETCH", "total_shards": "1", "source": "{\"from\":382375,\"size\":35,\"timeout\":\"10s\",\"query\":{\"match_all\":{\"boost\":1.0}},\"sort\":[{\"publishedAt\":{\"order\":\"desc\"}}],\"track_total_hits\":-1}", "cluster.uuid": "ro-B1E7ZRcWafSbSXik7Ew", "node.id": "lFc-GfxPTUW6W6G8srGipw" }

2022-12-16T17:16:23.167357766Z stdout F {"type": "server", "timestamp": "2022-12-16T17:16:23,167Z", "level": "INFO", "component": "o.e.m.j.JvmGcMonitorService", "cluster.name": "es", "node.name": "es-data-azb-spot-v1-2", "message": "[gc][262279] overhead, spent [252ms] collecting in the last [1s]", "cluster.uuid": "ro-B1E7ZRcWafSbSXik7Ew", "node.id": "vf1m25zjS3yK7edG4pmANQ" }

2022-12-16T17:16:21.149265959Z stdout F {"type": "server", "timestamp": "2022-12-16T17:16:21,149Z", "level": "INFO", "component": "o.e.m.j.JvmGcMonitorService", "cluster.name": "es", "node.name": "es-data-azb-spot-v1-0", "message": "[gc][784665] overhead, spent [371ms] collecting in the last [1s]", "cluster.uuid": "ro-B1E7ZRcWafSbSXik7Ew", "node.id": "lFc-GfxPTUW6W6G8srGipw" }

2022-12-16T17:16:20.179422575Z stdout F {"type": "index_search_slowlog", "timestamp": "2022-12-16T17:16:20,179Z", "level": "INFO", "component": "i.s.s.query", "cluster.name": "es", "node.name": "es-data-azb-spot-v1-1", "message": "[search.index.v4][0]", "took": "1s", "took_millis": "1083", "total_hits": "0+ hits", "types": "[]", "stats": "[]", "search_type": "QUERY_THEN_FETCH", "total_shards": "1", "source": "{\"from\":389970,\"size\":35,\"timeout\":\"10s\",\"query\":{\"match_all\":{\"boost\":1.0}},\"sort\":[{\"publishedAt\":{\"order\":\"desc\"}}],\"track_total_hits\":-1}", "cluster.uuid": "ro-B1E7ZRcWafSbSXik7Ew", "node.id": "N7YJUmUpSPKBj5p15WGokw" }

2022-12-16T17:16:19.908592789Z stdout F {"type": "index_search_slowlog", "timestamp": "2022-12-16T17:16:19,908Z", "level": "INFO", "component": "i.s.s.query", "cluster.name": "es", "node.name": "es-data-azb-spot-v1-2", "message": "[search.index.v4][0]", "took": "1s", "took_millis": "1063", "total_hits": "0+ hits", "types": "[]", "stats": "[]", "search_type": "QUERY_THEN_FETCH", "total_shards": "1", "source": "{\"from\":383740,\"size\":35,\"timeout\":\"10s\",\"query\":{\"match_all\":{\"boost\":1.0}},\"sort\":[{\"publishedAt\":{\"order\":\"desc\"}}],\"track_total_hits\":-1}", "cluster.uuid": "ro-B1E7ZRcWafSbSXik7Ew", "node.id": "vf1m25zjS3yK7edG4pmANQ" }

2022-12-16T17:16:18.537198311Z stdout F {"type": "index_search_slowlog", "timestamp": "2022-12-16T17:16:18,536Z", "level": "INFO", "component": "i.s.s.query", "cluster.name": "es", "node.name": "es-data-azb-spot-v1-1", "message": "[search.index.v4][0]", "took": "1s", "took_millis": "1098", "total_hits": "0+ hits", "types": "[]", "stats": "[]", "search_type": "QUERY_THEN_FETCH", "total_shards": "1", "source": "{\"from\":378175,\"size\":35,\"timeout\":\"10s\",\"query\":{\"match_all\":{\"boost\":1.0}},\"sort\":[{\"publishedAt\":{\"order\":\"desc\"}}],\"track_total_hits\":-1}", "cluster.uuid": "ro-B1E7ZRcWafSbSXik7Ew", "node.id": "N7YJUmUpSPKBj5p15WGokw" }

2022-12-16T17:16:18.059858155Z stdout F {"type": "server", "timestamp": "2022-12-16T17:16:18,059Z", "level": "INFO", "component": "o.e.m.j.JvmGcMonitorService", "cluster.name": "es", "node.name": "es-data-azb-spot-v1-0", "message": "[gc][784662] overhead, spent [387ms] collecting in the last [1s]", "cluster.uuid": "ro-B1E7ZRcWafSbSXik7Ew", "node.id": "lFc-GfxPTUW6W6G8srGipw" }

2022-12-16T17:13:37.949263719Z stdout F {"type": "index_search_slowlog", "timestamp": "2022-12-16T17:13:37,949Z", "level": "INFO", "component": "i.s.s.query", "cluster.name": "es", "node.name": "es-data-azb-spot-v1-1", "message": "[search.index.v4][0]", "took": "1s", "took_millis": "1023", "total_hits": "0+ hits", "types": "[]", "stats": "[]", "search_type": "QUERY_THEN_FETCH", "total_shards": "1", "source": "{\"from\":358295,\"size\":35,\"timeout\":\"10s\",\"query\":{\"match_all\":{\"boost\":1.0}},\"sort\":[{\"publishedAt\":{\"order\":\"desc\"}}],\"track_total_hits\":-1}", "cluster.uuid": "ro-B1E7ZRcWafSbSXik7Ew", "node.id": "N7YJUmUpSPKBj5p15WGokw" }

2022-12-16T17:13:37.475298023Z stdout F {"type": "server", "timestamp": "2022-12-16T17:13:37,474Z", "level": "INFO", "component": "o.e.m.j.JvmGcMonitorService", "cluster.name": "es", "node.name": "es-data-azb-spot-v1-2", "message": "[gc][262114] overhead, spent [251ms] collecting in the last [1s]", "cluster.uuid": "ro-B1E7ZRcWafSbSXik7Ew", "node.id": "vf1m25zjS3yK7edG4pmANQ" }

2022-12-16T17:10:37.06971855Z stdout F {"type": "server", "timestamp": "2022-12-16T17:10:37,069Z", "level": "INFO", "component": "o.e.m.j.JvmGcMonitorService", "cluster.name": "es", "node.name": "es-data-azb-ondemand-v1-2", "message": "[gc][879829] overhead, spent [259ms] collecting in the last [1s]", "cluster.uuid": "ro-B1E7ZRcWafSbSXik7Ew", "node.id": "kv_7iljPS_uWogNdpcA9QA" }

2022-12-16T17:08:14.470691907Z stdout F {"type": "server", "timestamp": "2022-12-16T17:08:14,470Z", "level": "INFO", "component": "o.e.m.j.JvmGcMonitorService", "cluster.name": "es", "node.name": "es-data-azb-spot-v1-2", "message": "[gc][261792] overhead, spent [263ms] collecting in the last [1s]", "cluster.uuid": "ro-B1E7ZRcWafSbSXik7Ew", "node.id": "vf1m25zjS3yK7edG4pmANQ" }
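To make log lines like the ones above easier to compare across nodes, here is a minimal Python sketch that groups the GC overhead and the slow-log `from` offsets by node. It assumes each line has the shape `<timestamp> stdout F <json>` as in the excerpt. The function names `parse_line` and `summarize` are made up for illustration and are not part of any Elasticsearch tooling.

```python
import json
import re
from collections import defaultdict

# Matches messages such as "spent [278ms] collecting in the last [1s]".
# Assumption: durations are reported in ms, as in the excerpt above.
GC_RE = re.compile(r"spent \[(\d+)ms\] collecting in the last \[(\d+)s\]")


def parse_line(line):
    """Return the JSON payload of one container log line, or None."""
    start = line.find("{")
    if start == -1:
        return None
    try:
        return json.loads(line[start:])
    except json.JSONDecodeError:
        return None


def summarize(lines):
    """Group GC overhead (ms) and slow-search (from, took_millis) by node."""
    gc_ms = defaultdict(list)
    slow_searches = defaultdict(list)
    for line in lines:
        entry = parse_line(line)
        if entry is None:
            continue
        node = entry.get("node.name", "unknown")
        if entry.get("type") == "server":
            match = GC_RE.search(entry.get("message", ""))
            if match:
                gc_ms[node].append(int(match.group(1)))
        elif entry.get("type") == "index_search_slowlog":
            try:
                # "source" holds the search request as an escaped JSON string.
                source = json.loads(entry.get("source", "{}"))
                took = int(entry.get("took_millis", "0"))
            except (json.JSONDecodeError, ValueError):
                continue
            slow_searches[node].append((source.get("from", 0), took))
    return gc_ms, slow_searches
```

Calling `summarize(open("es.log"))` on a saved copy of the logs (the file name is hypothetical) returns two dictionaries keyed by node name, which makes it easier to see which nodes report GC overhead and which `from` offsets appear in the slow log.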

This is again the network chart per node for that same time range:

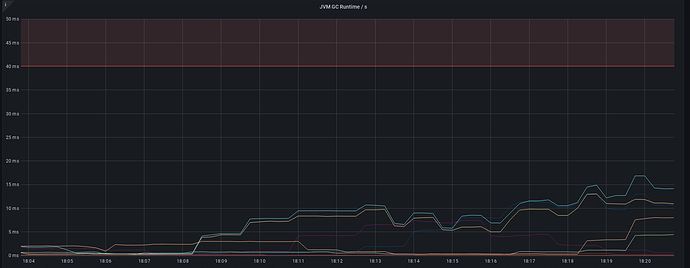

This is the GC runtime/s graph for the same time range. It is far higher than our baseline, but still not critical (less than 40 ms).

This is our GC runtime/s now