Kibana + Elasticsearch + APM Server version: 9.0.1

Original install method: Docker

OpenTelemetry Collector Contrib version: 0.128.0

Redis Version: 8.0.2-alpine3.21

Steps to reproduce:

- Deploy the services in Docker.

- Run the OpenTelemetry Collector with the configuration below.

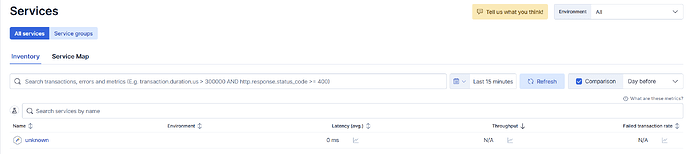

- Check the services in the Services section of the APM UI.

    receivers:
      otlp:
        protocols:
          grpc:
            endpoint: "0.0.0.0:4317"
      redis:
        endpoint: "redis:6379"
        collection_interval: 2s
        tls:
          insecure_skip_verify: true
          insecure: true

    ...

    service:
      metrics:
        receivers:
          - otlp
          - redis
        processors: [batch]
        exporters:
          - otlp/elastic
          - debug

Errors in APM UI:

All the metrics are received with the service name "unknown".

Are there any default dashboards for viewing Redis metrics, possibly inside the APM UI?

What is the best practice here?
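One thing that may be worth trying, as a minimal sketch rather than a confirmed fix: the Redis receiver does not appear to set a `service.name` resource attribute, and APM Server seems to fall back to "unknown" when that attribute is missing. The fragment below assumes the `resource` processor from the contrib distribution is available and sets the attribute before export. The value `redis`, the processor name `resource/redis`, and the separate `metrics/redis` pipeline are hypothetical choices for illustration. The `otlp/elastic` and `debug` exporters are the ones already defined in the configuration above.

```yaml
processors:
  batch:
  # Assumption: the contrib "resource" processor is included in the build.
  resource/redis:
    attributes:
      - key: service.name
        value: redis        # hypothetical service name, pick your own
        action: upsert

service:
  pipelines:
    # Hypothetical dedicated pipeline, so the upsert only touches Redis
    # metrics and does not overwrite service.name on application data
    # arriving through the otlp receiver.
    metrics/redis:
      receivers: [redis]
      processors: [resource/redis, batch]
      exporters: [otlp/elastic, debug]
```

Keeping the Redis receiver in its own pipeline is a deliberate choice here: with `upsert` in a shared pipeline, every metric from the `otlp` receiver would also be relabelled as `redis`.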

Provide logs and/or server output (if relevant):

    {
      "_index": ".ds-metrics-apm.app.unknown-default-2025.06.18-000001",
      "_id": "FAwKg5cBonjAsBhFCit6",
      "_version": 1,
      "_source": {
        "observer": {
          "hostname": "6d2236ca0a04",
          "type": "apm-server",
          "version": "9.0.1"
        },
        "agent": {
          "name": "otlp",
          "version": "unknown"
        },
        "@timestamp": "2025-06-18T12:36:01.775Z",
        "data_stream": {
          "namespace": "default",
          "type": "metrics",
          "dataset": "apm.app.unknown"
        },
        "service": {
          "framework": {
            "name": "github.com/open-telemetry/opentelemetry-collector-contrib/receiver/redisreceiver",
            "version": "0.128.0"
          },
          "name": "unknown",
          "language": {
            "name": "unknown"
          }
        },
        "redis.db.avg_ttl": 0,
        "metricset": {
          "name": "app"
        },
        "event": {},
        "redis.db.expires": 0,
        "redis.db.keys": 2,
        "labels": {
          "redis_version": "8.0.2",
          "db": "0"
        }
      },
      "fields": {
        "service.framework.version": ["0.128.0"],
        "redis.db.expires": [0],
        "redis.db.keys": [2],
        "service.language.name": ["unknown"],
        "agent.name.text": ["otlp"],
        "processor.event": ["metric"],
        "agent.name": ["otlp"],
        "metricset.name.text": ["app"],
        "labels.db": ["0"],
        "service.name": ["unknown"],
        "service.framework.name": ["github.com/open-telemetry/opentelemetry-collector-contrib/receiver/redisreceiver"],
        "data_stream.namespace": ["default"],
        "service.language.name.text": ["unknown"],
        "labels.redis_version": ["8.0.2"],
        "data_stream.type": ["metrics"],
        "observer.hostname": ["6d2236ca0a04"],
        "service.framework.name.text": ["github.com/open-telemetry/opentelemetry-collector-contrib/receiver/redisreceiver"],
        "metricset.name": ["app"],
        "@timestamp": ["2025-06-18T12:36:01.775Z"],
        "observer.type": ["apm-server"],
        "observer.version": ["9.0.1"],
        "redis.db.avg_ttl": [0],
        "service.name.text": ["unknown"],
        "data_stream.dataset": ["apm.app.unknown"],
        "agent.version": ["unknown"]
      }
    }