Hello,

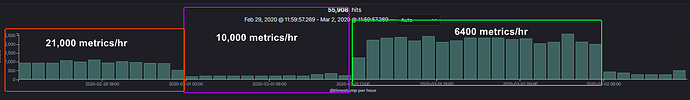

I'm trying to make sense of the CW:GMD-Metrics usage in my usage report. It currently shows 10k metrics every hour, but I have no idea where that number is coming from. Looking farther back in the report, there are some points where it was using 21k metrics.

These numbers look like what I would expect if it were pulling all metrics in the namespaces I'm working with.

| Service | Operation | UsageType | Resource | StartTime | EndTime | UsageValue |
|---|---|---|---|---|---|---|
| AmazonCloudWatch | GetMetricData | CW:GMD-Metrics | | 3/1/2020 10:00 | 3/1/2020 11:00 | 10560 |
| AmazonCloudWatch | GetMetricData | CW:GMD-Requests | | 3/1/2020 10:00 | 3/1/2020 11:00 | 240 |

For context, data is being pulled from 13 APIs and 6 DynamoDB tables. If I understand how GMD is charged correctly, this should only come out to 2280 metrics/hr. I'm using roughly 5x that.
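As a quick sanity check on those numbers, here is a minimal Python sketch. It assumes the 2280 figure comes from (13 + 6) resources × 2 metric names × 60 polls per hour; that formula is a guess at the intended calculation, not a statement of how CloudWatch actually bills GetMetricData. The observed values are the ones from the usage report row for 10:00 to 11:00.

```python
# Figures from the post; the formula is an assumed reconstruction of the 2280 estimate.
API_COUNT = 13
TABLE_COUNT = 6
METRIC_NAMES_PER_RESOURCE = 2    # two names are listed per namespace in the config
POLLS_PER_HOUR = 3600 // 60      # period: 60s


def expected_metrics_per_hour(resources, names_per_resource, polls_per_hour):
    """Naive estimate: one billed metric per resource, per metric name, per poll."""
    return resources * names_per_resource * polls_per_hour


expected = expected_metrics_per_hour(
    API_COUNT + TABLE_COUNT, METRIC_NAMES_PER_RESOURCE, POLLS_PER_HOUR
)

# Observed values from the usage report.
OBSERVED_METRICS = 10560   # CW:GMD-Metrics
OBSERVED_REQUESTS = 240    # CW:GMD-Requests

ratio = OBSERVED_METRICS / expected
metrics_per_poll = OBSERVED_METRICS // POLLS_PER_HOUR
requests_per_poll = OBSERVED_REQUESTS // POLLS_PER_HOUR
metrics_per_request = OBSERVED_METRICS // OBSERVED_REQUESTS

print(f"expected metrics/hr:  {expected}")
print(f"observed / expected:  {ratio:.2f}x")
print(f"metrics per poll:     {metrics_per_poll}")
print(f"requests per poll:    {requests_per_poll}")
print(f"metrics per request:  {metrics_per_request}")
```

Under these assumptions the report works out to 176 metrics and 4 requests per 60s poll, against the 38 metrics per poll that the naive estimate predicts.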

Config:

metricbeat.modules:
- module: aws
  period: 60s
  access_key_id: '${AWS_ACCESS_KEY_ID_DATAPRE}'
  secret_access_key: '${AWS_SECRET_ACCESS_KEY_DATAPRE}'
  tags: ["pre"]
  metricsets:
    - cloudwatch
  metrics:
    - namespace: AWS/ApiGateway
      name: ["Latency", "IntegrationLatency"]
      statistic: ["Average", "Sum"]
  region:
    - us-east-1
- module: aws
  period: 60s
  access_key_id: '${AWS_ACCESS_KEY_ID_DATAPRE}'
  secret_access_key: '${AWS_SECRET_ACCESS_KEY_DATAPRE}'
  metricsets:
    - cloudwatch
  metrics:
    - namespace: AWS/DynamoDB
      name: ["ConsumedReadCapacityUnits", "ConsumedWriteCapacityUnits"]
      tags.resource_type_filter: dynamodb:table
      statistic: ["Average", "Sum"]
      tags:
        - key: "Tenant"
          value: "tenant1"