Hello @unico, welcome to the community.

Apologies this seemed so hard. Your CSV needs a little parsing.

Also, with geo_point data you need to define a mapping (data type) before you index anything.

In Kibana -> Dev Tools

Create the mapping first. This is important because geo_point needs to be defined ahead of time. The geoip.location field at the bottom is the important part.

    PUT my-discuss-connexions/
    {
      "mappings": {
        "properties": {
          "connexion": {
            "type": "long"
          },
          "port": {
            "type": "long"
          },
          "ip": {
            "type": "ip"
          },
          "pais": {
            "type": "keyword"
          },
          "pais_code": {
            "type": "keyword"
          },
          "geoip": {
            "properties": {
              "location": {
                "type": "geo_point"
              }
            }
          }
        }
      }
    }
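One thing that may not be obvious from the mapping: the nested geoip / location object corresponds to the dotted field name geoip.location, and that is the field the Logstash geoip output has to land in. As a rough sketch only (the helper name `geo_point_fields` is made up for illustration, and the mapping here is a trimmed copy of the one above), this Python snippet walks a mapping and lists its geo_point fields by their dotted names:

```python
def geo_point_fields(properties, prefix=""):
    """Return the dotted names of every geo_point field under `properties`."""
    found = []
    for name, spec in properties.items():
        path = prefix + name
        if spec.get("type") == "geo_point":
            found.append(path)
        # Object fields nest their children under another "properties" key.
        found.extend(geo_point_fields(spec.get("properties", {}), path + "."))
    return found


# Trimmed copy of the mapping above (hypothetical subset, for illustration).
mapping = {
    "mappings": {
        "properties": {
            "ip": {"type": "ip"},
            "geoip": {"properties": {"location": {"type": "geo_point"}}},
        }
    }
}

print(geo_point_fields(mapping["mappings"]["properties"]))
```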

Logstash

Your IPs would probably not resolve in the geoip filter because the port was still attached to them, so the config below splits the IP from the port first.

    # discuss-csv-ip-data.conf
    input {
      file {
        path => "/Users/sbrown/workspace/sample-data/discuss/csv-ip-data.csv"
        start_position => "beginning"
        # Do not remember the read position, so the file is re-read on every run
        sincedb_path => "/dev/null"
      }
    }

    filter {
      # Split each line into fields; this separates the IP from the port
      dissect {
        mapping => {
          "message" => "%{pais_code},%{pais}; %{ip}:%{port}; %{connexion}"
        }
      }
      # Look up the now-clean IP; the result lands under the geoip field
      geoip {
        source => "ip"
      }
    }

    output {
      stdout {codec => rubydebug}
      elasticsearch {
        hosts => ["localhost:9200"]
        index => "my-discuss-connexions"
      }
    }

$ sudo /Users/sbrown/workspace/elastic-install/7.13.0/logstash-7.13.0/bin/logstash -r -f ./discuss-csv-ip-data.conf

Sample output

    {
          "@version" => "1",
           "message" => "CH,Switzerland; 194.12.140.214:143; 1399",
         "pais_code" => "CH",
              "path" => "/Users/sbrown/workspace/sample-data/discuss/csv-ip-data.csv",
             "geoip" => {
             "country_code3" => "CH",
            "continent_code" => "EU",
                  "location" => {
                "lon" => 8.1551,
                "lat" => 47.1449
            },
                        "ip" => "194.12.140.214",
                  "latitude" => 47.1449,
                  "timezone" => "Europe/Zurich",
                 "longitude" => 8.1551,
             "country_code2" => "CH",
              "country_name" => "Switzerland"
        },
        "@timestamp" => 2021-06-18T14:58:25.682Z,
         "connexion" => "1399",
              "port" => "143",
              "host" => "ceres",
              "pais" => "Switzerland",
                "ip" => "194.12.140.214"
    }
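If it helps to see what the dissect pattern is doing with each line, here is a rough Python equivalent. This is only an illustration, not part of the pipeline: the function name `dissect_line` is made up, and it simply cuts the line at the same delimiters, left to right. The last part shows why the lookup would fail with the port still attached: the string is not a valid IP address at all.

```python
import ipaddress


def dissect_line(line):
    """Cut 'CC,Country; ip:port; count' at the same delimiters as the dissect mapping."""
    pais_code, _, rest = line.partition(",")
    pais, _, rest = rest.partition("; ")
    ip, _, rest = rest.partition(":")
    port, _, connexion = rest.partition("; ")
    return {
        "pais_code": pais_code,
        "pais": pais,
        "ip": ip,
        "port": port,
        "connexion": connexion,
    }


event = dissect_line("CH,Switzerland; 194.12.140.214:143; 1399")
print(event)

# The split-out value parses as an IP address...
print(ipaddress.ip_address(event["ip"]))

# ...but the original "ip:port" value does not.
try:
    ipaddress.ip_address("194.12.140.214:143")
except ValueError as err:
    print("not an IP:", err)
```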

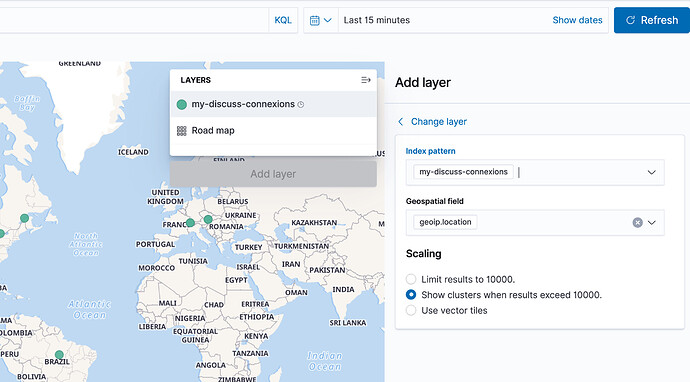

Create an index pattern. If you choose to use the time field, be aware that the time range selected in Kibana will then also apply to what shows up on the map.

Then add the index to a map in Kibana (sorry, I am not a Grafana expert).