Hi Community,

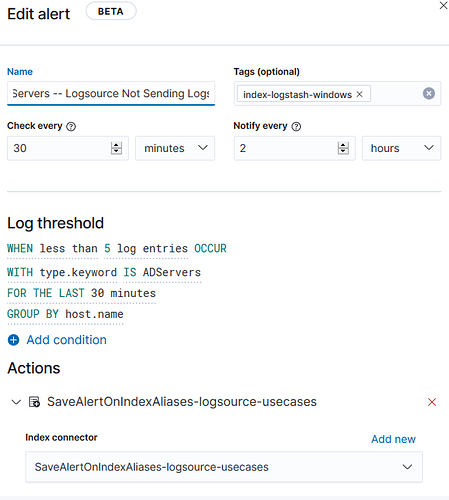

The alert condition and other details are shown in the screenshot above.

In that screenshot, the filter condition is:

type.keyword IS ADServers (the type field is mapped as string/text in the Elasticsearch field mapping)

I also tried this variant:

type IS ADServers (the type field is mapped as string/text in the Elasticsearch field mapping)

type is a custom field that I extract with Logstash. It indicates the kind of log source, such as AD servers in this case. The host.name field contains the name of the AD server.

Actual Issue:

The first condition, type.keyword IS ADServers, doesn't work. If it worked, it would return only the Active Directory server names in host.name, but it returns all servers in the host.name field.

For example, if A, B and C are the AD servers, type IS ADServers should return only A, B and C, because I'm grouping on host.name. Instead, it returns all servers: A, B, C, D, E, F, ... Z. That means the condition type IS ADServers is not being applied.
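To make the expectation concrete, here is a minimal Python sketch of the behaviour I expect: filter on type first, then group on host.name. The sample documents and the "OtherServers" value are made up for illustration, and this only simulates the logic; it is not what the alert rule actually runs.

```python
from collections import defaultdict

# Hypothetical sample documents; host names and the "OtherServers" value are made up.
docs = [
    {"type": "ADServers", "host": {"name": "A"}},
    {"type": "ADServers", "host": {"name": "B"}},
    {"type": "ADServers", "host": {"name": "C"}},
    {"type": "ADServers", "host": {"name": "A"}},
    {"type": "OtherServers", "host": {"name": "D"}},
    {"type": "OtherServers", "host": {"name": "E"}},
]


def group_hosts(documents, type_value):
    """Keep only documents whose type matches, then count them per host.name."""
    counts = defaultdict(int)
    for doc in documents:
        if doc.get("type") == type_value:
            counts[doc["host"]["name"]] += 1
    return dict(counts)


# Expected groups: only the AD servers, never D or E.
print(sorted(group_hosts(docs, "ADServers")))
```

What I actually get from the alert looks as if the filter step were skipped, so every host ends up as a group.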

P.S:

1- I can see both fields, type and host.name, in the real-time logs.

2- If I apply a filter like type: ADServers in Kibana, I can see the matching JSON documents in the index.
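For reference, this is a sketch of the kind of search body I would expect to be equivalent to the condition: a term filter on the type plus a terms aggregation on host.name. It assumes that type has a keyword sub-field and that host.name is aggregatable. The function name and the bucket size of 100 are my own choices, and I don't know whether the alert builds its query this way.

```python
import json


def build_check_query(type_value, type_field="type.keyword", group_field="host.name"):
    """Build a search body: exact-match filter on the type, buckets per host."""
    return {
        "size": 0,  # only the aggregation buckets are needed, not the hits
        "query": {"bool": {"filter": [{"term": {type_field: type_value}}]}},
        "aggs": {"hosts": {"terms": {"field": group_field, "size": 100}}},
    }


# Print the body so it can be pasted into a _search request against the index.
print(json.dumps(build_check_query("ADServers"), indent=2))
```

If the buckets returned for this body contain only A, B and C, the data and mapping are fine and the problem is in how the alert applies the condition.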