Issue: My logs are written to a log file, and each log entry contains time information. Elasticsearch does not recognize the time field, so I cannot select it as the time field when creating my Kibana index pattern.

**Environment:**

- Ubuntu 18.04
- Fluentd 1.12.3
- td-agent 4, installed with `curl -L https://toolbelt.treasuredata.com/sh/install-ubuntu-bionic-td-agent4.sh | sh`
- Elasticsearch 7.12.1
- Kibana 7.12.1

**td-agent.conf:**

```
<source>
  @type tail
  path /var/log/sau.log
  pos_file /var/log/td-agent/sau.pos
  read_from_head true
  tag log
  <parse>
    @type regexp
    expression /^\[(?<logtime>[^\]]*)\] (?<name>[^ ]*) (?<title>[^ ]*) (?<id>\d*)$/
    time_key logtime
    keep_time_key true
    time_format %Y-%m-%d %H:%M:%S %z
    types id:integer
  </parse>
</source>

<match *.**>
  @type elasticsearch
  host localhost
  port 9200
  index_name sauindx1
</match>
```
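To sanity-check the `<parse>` section outside Fluentd, here is a minimal Python sketch that applies the same regular expression to the sample log entry. It assumes Python 3 and translates the Ruby-style named groups `(?<name>...)` into Python's `(?P<name>...)`; `parse_line` is a hypothetical helper, not part of Fluentd, and the `id` conversion only imitates `types id:integer`.

```python
import re
from typing import Optional

# The td-agent <parse> expression, with Ruby named groups (?<name>...)
# rewritten as Python named groups (?P<name>...); otherwise unchanged.
LOG_PATTERN = re.compile(
    r"^\[(?P<logtime>[^\]]*)\] (?P<name>[^ ]*) (?P<title>[^ ]*) (?P<id>\d*)$"
)


def parse_line(line: str) -> Optional[dict]:
    """Return the captured fields, or None if the line does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    record = match.groupdict()
    # Imitates "types id:integer"; an empty id is left as a string here.
    if record["id"]:
        record["id"] = int(record["id"])
    return record


if __name__ == "__main__":
    print(parse_line("[2013-02-28 12:00:00 +0900] sau engineer 1"))
    # → {'logtime': '2013-02-28 12:00:00 +0900', 'name': 'sau', 'title': 'engineer', 'id': 1}
```

The captured fields match the record Fluentd prints to stdout. A line that starts with anything other than `[` (for example, a curly quote carried over into the `echo` command) returns `None`, and it would not match the td-agent expression either.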

Sample log entry: `[2013-02-28 12:00:00 +0900] sau engineer 1`

Command used to simulate a log entry: `echo "[2013-02-28 12:00:00 +0900] sau engineer 1" | tee -a /var/log/sau.log`

Official documentation I followed: regexp - Fluentd

The same log entry as printed to stdout by Fluentd:

`2013-02-28 03:00:00.000000000 +0000 log: {"logtime":"2013-02-28 12:00:00 +0900","name":"sau","title":"engineer","id":1}`

When I print to stdout from Fluentd, the event time is `2013-02-28 03:00:00.000000000 +0000`, which is my `logtime` value converted to UTC. This indicates that Fluentd recognizes the time field I defined.
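The UTC conversion in that stdout line can be reproduced with a short Python sketch. It assumes Python 3.7 or later and that Python's `strptime` directives behave like the `time_format` directives used here for this particular layout; `event_time_utc` is a hypothetical helper for illustration only.

```python
from datetime import datetime, timezone

# Same directives as "time_format %Y-%m-%d %H:%M:%S %z" in td-agent.conf.
TIME_FORMAT = "%Y-%m-%d %H:%M:%S %z"


def event_time_utc(logtime: str) -> datetime:
    """Parse a logtime value (with its +0900 offset) and express it in UTC."""
    return datetime.strptime(logtime, TIME_FORMAT).astimezone(timezone.utc)


if __name__ == "__main__":
    print(event_time_utc("2013-02-28 12:00:00 +0900"))
    # → 2013-02-28 03:00:00+00:00
```

12:00 at +09:00 is 03:00 UTC, the same instant Fluentd shows as the event time.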

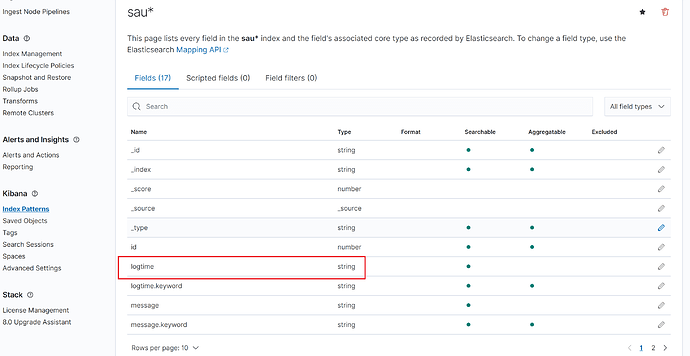

However, when I send the logs to Elasticsearch and then create the Kibana index pattern, the time field is not offered as a time field.
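One thing worth checking, offered as an assumption rather than a confirmed diagnosis: the event time in the stdout line is Fluentd metadata, not part of the JSON record, and fluent-plugin-elasticsearch only adds a `@timestamp` field when an option such as `logstash_format` or `include_timestamp` is enabled. Elasticsearch would then see only the `logtime` string, and its default `dynamic_date_formats` (`strict_date_optional_time` and `yyyy/MM/dd HH:mm:ss Z||yyyy/MM/dd Z`) do not appear to cover the `2013-02-28 12:00:00 +0900` layout, so the field would likely be mapped as text. The sketch below only shows the same instant rewritten in ISO 8601, the layout `strict_date_optional_time` is based on; `logtime_to_iso8601` is a hypothetical helper, and how to emit this from td-agent is left to the plugin documentation.

```python
from datetime import datetime

# Layout of the "logtime" field, taken from time_format in td-agent.conf.
TIME_FORMAT = "%Y-%m-%d %H:%M:%S %z"


def logtime_to_iso8601(logtime: str) -> str:
    """Rewrite a logtime value as ISO 8601, keeping its original UTC offset."""
    return datetime.strptime(logtime, TIME_FORMAT).isoformat()


if __name__ == "__main__":
    print(logtime_to_iso8601("2013-02-28 12:00:00 +0900"))
    # → 2013-02-28T12:00:00+09:00
```

The differences from the original value are the `T` separator and the colon in the offset.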

Is there anything I am doing wrong here? I would appreciate some guidance.

Reference links:

- Elasticsearch installation: Install Elasticsearch with Debian Package | Elasticsearch Guide [7.12] | Elastic
- Kibana installation: Install Kibana with Debian package | Kibana Guide [7.12] | Elastic
- Fluentd installation: Install by DEB Package (Debian/Ubuntu) - Fluentd
- Regexp parser: regexp - Fluentd