I would set up your own index, template, mapping, pipeline, etc.

Here is a template (it is a legacy one; you can figure out the new components yourself)

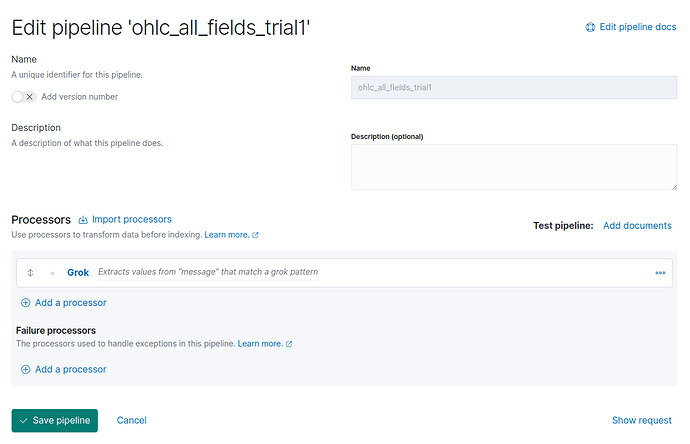

Here is a pipeline

DELETE _ingest/pipeline/stock-ticker-pipeline

PUT /_ingest/pipeline/stock-ticker-pipeline
{
  "description": "Stock Ticker Pipeline",
  "version": 0,
  "processors": [
    {
      "grok": {
        "field": "message",
        "trace_match": true,
        "patterns": [
          """%{GREEDYDATA:irrelevant_data}Stock = %{GREEDYDATA:Stock}
%{GREEDYDATA:irrelevant_data}Date = %{GREEDYDATA:date}
%{GREEDYDATA:irrelevant_data}Volume=%{NUMBER:Volume}
%{GREEDYDATA:irrelevant_data}Low=%{BASE10NUM:Low}
%{GREEDYDATA:irrelevant_data}High=%{BASE10NUM:High}
%{GREEDYDATA:irrelevant_data}Open=%{BASE10NUM:Open}
%{GREEDYDATA:irrelevant_data}Close=%{BASE10NUM:Close}"""
        ]
      }
    },
    {
      "convert" : {
        "field" : "Volume",
        "type": "long"
      }
    },
    {
      "convert" : {
        "field" : "Low",
        "type": "double"
      }
    },
    {
      "convert" : {
        "field" : "High",
        "type": "double"
      }
    },
    {
      "convert" : {
        "field" : "Open",
        "type": "double"
      }
    },
    {
      "convert" : {
        "field" : "Close",
        "type": "double"
      }
    },
    {
      "date": {
        "field": "date",
        "target_field": "busDate",
        "formats": [
          "yyyy-MM-dd"
        ]
      }
    }
  ]
}
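To make the three stages of the pipeline (grok, convert, date) easier to follow, here is a rough Python sketch of the same logic. It is only an approximation: the regex stands in for the grok pattern, the numeric classes `NUMBER` and `BASE10NUM` are simplified to a plain signed decimal, and the names `NUM`, `LINE_PATTERN`, `run_pipeline` and `SAMPLE` are made up for this illustration. It also assumes the date processor's default UTC time zone.

```python
import re
from datetime import datetime, timezone

# Simplified stand-in for grok's NUMBER / BASE10NUM (assumption: signed decimal only).
NUM = r"[+-]?\d+(?:\.\d+)?"

# GREEDYDATA is ".*", which does not cross newlines, so each "\n" below
# pins one key to one line of the multi-line message.
LINE_PATTERN = re.compile(
    r".*Stock = (?P<Stock>.*)\n"
    r".*Date = (?P<date>.*)\n"
    rf".*Volume=(?P<Volume>{NUM})\n"
    rf".*Low=(?P<Low>{NUM})\n"
    rf".*High=(?P<High>{NUM})\n"
    rf".*Open=(?P<Open>{NUM})\n"
    rf".*Close=(?P<Close>{NUM})"
)


def run_pipeline(message: str) -> dict:
    """Approximate the grok -> convert -> date steps for one message."""
    match = LINE_PATTERN.search(message)
    if match is None:
        raise ValueError("message does not match the expected ticker layout")
    doc = match.groupdict()                      # grok: every capture is a string
    doc["Volume"] = int(doc["Volume"])           # convert: long
    for field in ("Low", "High", "Open", "Close"):
        doc[field] = float(doc[field])           # convert: double
    # date: parse yyyy-MM-dd and store an ISO timestamp in busDate (UTC assumed)
    parsed = datetime.strptime(doc["date"], "%Y-%m-%d").replace(tzinfo=timezone.utc)
    doc["busDate"] = parsed.strftime("%Y-%m-%dT%H:%M:%S.000Z")
    return doc


SAMPLE = (
    "2021/06/27 15:17:49 : INFO | Stock = 0700.HK\n"
    "2021/06/27 15:17:49 : INFO | Date = 2021-06-18\n"
    "2021/06/27 15:17:49 : INFO | Volume=12301606\n"
    "2021/06/27 15:17:49 : INFO | Low=599.5\n"
    "2021/06/27 15:17:49 : INFO | High=608.0\n"
    "2021/06/27 15:17:49 : INFO | Open=602.0\n"
    "2021/06/27 15:17:49 : INFO | Close=603.0"
)

if __name__ == "__main__":
    print(run_pipeline(SAMPLE))
```

The sketch skips the `irrelevant_data` capture, which the real pipeline keeps. If you do not want that field indexed, you would have to drop it in the pipeline yourself.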

Here is a sample document, indexed through the pipeline

POST /stock-ticker-000001/_doc/?pipeline=stock-ticker-pipeline
{
  "message": """2021/06/27 15:17:49 : INFO | Stock = 0700.HK
2021/06/27 15:17:49 : INFO | Date = 2021-06-18
2021/06/27 15:17:49 : INFO | Volume=12301606
2021/06/27 15:17:49 : INFO | Low=599.5
2021/06/27 15:17:49 : INFO | High=608.0
2021/06/27 15:17:49 : INFO | Open=602.0
2021/06/27 15:17:49 : INFO | Close=603.0"""
}

Here is the result. It shows the source (what you put in) and the fields (what is actually used in visualizations, aggregations, etc.)

GET stock-ticker-000001/_search
{
  "fields": [
    "*"
  ]
}

{
  "took" : 12,
  "timed_out" : false,
  "_shards" : {
    "total" : 1,
    "successful" : 1,
    "skipped" : 0,
    "failed" : 0
  },
  "hits" : {
    "total" : {
      "value" : 1,
      "relation" : "eq"
    },
    "max_score" : 1.0,
    "hits" : [
      {
        "_index" : "stock-ticker-000001",
        "_type" : "_doc",
        "_id" : "RZRO43oBx8ERHzwghUHq",
        "_score" : 1.0,
        "_source" : {
          "date" : "2021-06-18",
          "High" : 608.0,
          "message" : """2021/06/27 15:17:49 : INFO | Stock = 0700.HK
2021/06/27 15:17:49 : INFO | Date = 2021-06-18
2021/06/27 15:17:49 : INFO | Volume=12301606
2021/06/27 15:17:49 : INFO | Low=599.5
2021/06/27 15:17:49 : INFO | High=608.0
2021/06/27 15:17:49 : INFO | Open=602.0
2021/06/27 15:17:49 : INFO | Close=603.0""",
          "irrelevant_data" : "2021/06/27 15:17:49 : INFO | ",
          "Open" : 602.0,
          "busDate" : "2021-06-18T00:00:00.000Z",
          "Volume" : 12301606,
          "Low" : 599.5,
          "Close" : 603.0,
          "Stock" : "0700.HK"
        },
        "fields" : {
          "High" : [
            608.0
          ],
          "date" : [
            "2021-06-18T00:00:00.000Z"
          ],
          "busDate" : [
            "2021-06-18T00:00:00.000Z"
          ],
          "irrelevant_data.keyword" : [
            "2021/06/27 15:17:49 : INFO | "
          ],
          "Low" : [
            599.5
          ],
          "Volume" : [
            12301606
          ],
          "Close" : [
            603.0
          ],
          "message" : [
            """2021/06/27 15:17:49 : INFO | Stock = 0700.HK
2021/06/27 15:17:49 : INFO | Date = 2021-06-18
2021/06/27 15:17:49 : INFO | Volume=12301606
2021/06/27 15:17:49 : INFO | Low=599.5
2021/06/27 15:17:49 : INFO | High=608.0
2021/06/27 15:17:49 : INFO | Open=602.0
2021/06/27 15:17:49 : INFO | Close=603.0"""
          ],
          "Stock" : [
            "0700.HK"
          ],
          "irrelevant_data" : [
            "2021/06/27 15:17:49 : INFO | "
          ],
          "Open" : [
            602.0
          ]
        }
      }
    ]
  }
}
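The difference between the two sections is easiest to see side by side. The small Python sketch below works on an abbreviated copy of the hit above (only `date` and `Volume` are kept), and the helper name `compare_source_and_fields` is invented for this example. It pairs each `_source` value with the matching `fields` entry: `_source` is the JSON as it was stored, while `fields` always returns arrays and formats values according to the mapping, which is why `date` comes back as a full timestamp.

```python
# Abbreviated copy of the search hit shown above (assumption: two fields are enough
# to illustrate the point).
hit = {
    "_source": {"date": "2021-06-18", "Volume": 12301606},
    "fields": {"date": ["2021-06-18T00:00:00.000Z"], "Volume": [12301606]},
}


def compare_source_and_fields(hit: dict) -> dict:
    """Return {name: (source_value, fields_values)} for names present in both sections."""
    source, fields = hit["_source"], hit["fields"]
    return {name: (source[name], fields[name]) for name in source if name in fields}


for name, (stored, mapped) in compare_source_and_fields(hit).items():
    print(f"{name}: _source={stored!r} fields={mapped!r}")
```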

If you look at the mapping, everything looks good

GET stock-ticker-000001/

{
  "stock-ticker-000001" : {
    "aliases" : { },
    "mappings" : {
      "properties" : {
        "Close" : {
          "type" : "double"
        },
        "High" : {
          "type" : "double"
        },
        "Low" : {
          "type" : "double"
        },
        "Open" : {
          "type" : "double"
        },
        "Stock" : {
          "type" : "keyword",
          "ignore_above" : 256
        },
        "Volume" : {
          "type" : "long"
        },
        "busDate" : {
          "type" : "date"
        },
        "date" : {
          "type" : "date"
        },
        "irrelevant_data" : {
          "type" : "text",
          "fields" : {
            "keyword" : {
              "type" : "keyword",
              "ignore_above" : 256
            }
          }
        },
        "message" : {
          "type" : "text"
        }
      }
    },
    "settings" : {
      "index" : {
        "routing" : {
          "allocation" : {
            "include" : {
              "_tier_preference" : "data_content"
            }
          }
        },
        "number_of_shards" : "1",
        "provided_name" : "stock-ticker-000001",
        "creation_date" : "1627311211856",
        "number_of_replicas" : "1",
        "uuid" : "oceKzXnrSMWF2KdY0bMXug",
        "version" : {
          "created" : "7130499"
        }
      }
    }
  }
}

Now in filebeat.yml you can configure the output.

There are many ways to manage indices, rollover, etc.

This is just an example using daily indices. You may need something else in the long run, but this may get you started.

output.elasticsearch:
  hosts: ["localhost:9200"]
  pipeline: stock-ticker-pipeline
  index: "stock-ticker-%{+yyyy.MM.dd}"
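To show what the `%{+yyyy.MM.dd}` part of the index name does, here is a small Python sketch of how the daily index name would resolve. The function `daily_index` is invented for this illustration, and it assumes the date comes from the event's `@timestamp` in UTC, so treat it as an approximation of Filebeat's behaviour rather than its actual code.

```python
from datetime import datetime, timezone


def daily_index(event_time: datetime, prefix: str = "stock-ticker") -> str:
    """Mimic "stock-ticker-%{+yyyy.MM.dd}": prefix plus the event's UTC date."""
    return f"{prefix}-{event_time.astimezone(timezone.utc):%Y.%m.%d}"


# An event shipped at the time of the sample log lines lands in that day's index.
print(daily_index(datetime(2021, 6, 27, 15, 17, 49, tzinfo=timezone.utc)))
```

Note that the index date follows the time Filebeat read the line, not `busDate`. Depending on your Filebeat version, a custom index name may also require the `setup.template.name` and `setup.template.pattern` settings and an adjusted ILM setting, so check the documentation for your version.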