Hello,

I am trying to understand why otel metrics don't display the labels I set in my code.

I use:

- go 1.17
- elastic/apm 7.15.1
- opentelemetry 1.0.1
- native otlp collector 0.6.0

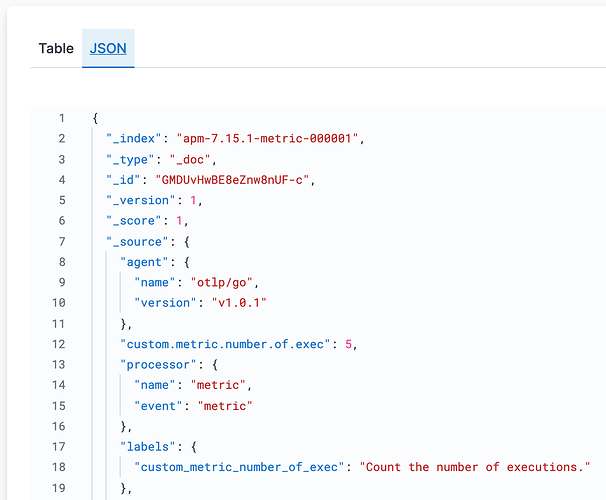

My metrics index mapping contains:

    "apm-7.15.1-metric-000001" : {
      "mappings" : {
        "_meta" : {
          "beat" : "apm",
          "version" : "7.15.1"
        },
        "dynamic_templates" : [
          {
            "labels" : {
              "path_match" : "labels.*",
              "match_mapping_type" : "string",
              "mapping" : {
                "type" : "keyword"
              }
            }
          },

My Go code, which adds one to a counter and attaches the labels each time the value is incremented:

    warnMeter.warningCounter.Add(
        warnMeter.ctx,
        1,
        attribute.Int("labels.code", code),
        attribute.String("labels.text", text),
        attribute.String("labels.server", warnMeter.server),
    )

As you can see, I am trying to add one integer label and two string labels (or attributes, as the otel spec calls them). Alas, I do not see any of them in the index record (but I do see the counter value).
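To make my expectation concrete, here is a minimal stdlib-only sketch of which of the three attributes I would expect the `labels` dynamic template from my mapping to pick up. It does not use the real otel or Elasticsearch APIs: the `attr` type and the `matchesLabelsTemplate` helper are hypothetical, the sample values are made up, and it assumes the attribute key is used unchanged as the field path, which may not be what apm-server actually does.

```go
package main

import (
	"fmt"
	"strings"
)

// attr is a hypothetical stand-in for an otel attribute (key plus value).
type attr struct {
	Key   string
	Value interface{}
}

// matchesLabelsTemplate reports whether a field would be matched by the
// "labels" dynamic template shown in the mapping: path_match "labels.*"
// and match_mapping_type "string".
// Assumption: the attribute key becomes the field path as-is.
func matchesLabelsTemplate(a attr) bool {
	_, isString := a.Value.(string)
	return strings.HasPrefix(a.Key, "labels.") && isString
}

func main() {
	// Made-up sample values; only the keys and value types mirror my code.
	attrs := []attr{
		{"labels.code", 42},
		{"labels.text", "some warning"},
		{"labels.server", "server-1"},
	}
	for _, a := range attrs {
		fmt.Printf("%-14s matches labels template: %v\n", a.Key, matchesLabelsTemplate(a))
	}
}
```

Under these assumptions only the two string attributes match that particular template; the integer one would have to be covered by another template or by default dynamic mapping. Either way, I would still expect at least the string labels to appear.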

Considering that the non-zero counter problem was only resolved in 7.15 (see the apm-server issue), is there a chance that otel metric labels are not yet supported? Or am I doing something wrong?

Thanks in advance!

Ben
