Hello,

Today I was playing with the OpenAI connector settings in Elastic Security Serverless, and I have some questions I couldn't find answers to in the documentation.

Which connector should I use?

- “OpenAI” => OpenAI connector and action | Kibana

- “AI Connector” => No docs available? (Technical Preview)

So there seem to be two connector options for OpenAI. Which one should I use, and is there a difference? Will the “AI Connector” replace the “OpenAI” connector some day?

I tried configuring the AI Connector. My experience:

- For the URL I configured “https://api.openai.com/v1/chat/completions”, and as Task Type chat_completion.

- I needed to verify my organisation in the OpenAI platform in order to use gpt-5-2025-08-07. After verifying, the “Hello World” test worked, but when I tested again 15 minutes later, I got the same “Your organization must be verified to stream this model” status 400 error. Another 10 minutes later it worked again.

- The minimum required privileges are not documented. I tried with restricted privileges and only gave Write access to “Model capabilities”.

- Once configured, it does not seem possible to edit the settings, as they are greyed out.
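To find out whether the intermittent “must be verified to stream this model” error comes from OpenAI itself or from the connector, one could send a similar streaming request directly. Below is a minimal sketch using only the Python standard library. It assumes the connector streams its responses (the error message suggests so), and the helper names are my own; the exact payload Kibana sends is not documented, so this only approximates the “Hello World” test.

```python
import json
import urllib.error
import urllib.request

# URL as configured in the AI Connector above
API_URL = "https://api.openai.com/v1/chat/completions"


def build_stream_request(model: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a streaming "Hello World" chat completion request.

    Hypothetical helper: the exact payload Kibana sends is an assumption;
    this only mimics a minimal streaming call with the same model.
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": "Hello World"}],
        # The 400 error mentions streaming, so request a streamed response
        "stream": True,
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def print_first_events(req: urllib.request.Request, max_lines: int = 5) -> None:
    """Send the request and print the first streamed lines, or the HTTP error."""
    try:
        with urllib.request.urlopen(req, timeout=30) as resp:
            for i, line in enumerate(resp):
                print(line.decode("utf-8").rstrip())
                if i + 1 >= max_lines:
                    break
    except urllib.error.HTTPError as err:
        # A 400 here means OpenAI itself rejects the call, independent of Kibana
        print(err.code, err.read().decode("utf-8"))
```

Calling `print_first_events(build_stream_request("gpt-5-2025-08-07", key))` a few times over 15 to 30 minutes would show whether the verification error also flips back and forth outside Kibana.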

So, to check whether everything works, I wanted to test Attack Discovery generation, but found that the Connector selector shows “No options available”…

That is weird, as I ran it a few days ago with the Elastic Managed LLM, which for some reason is no longer selectable?

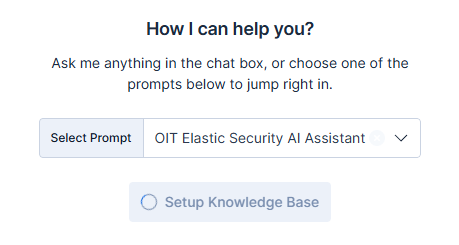

When trying the connector in the Elastic Security Alerts AI Assistant, I do get the option to select both connectors.

But when I ask something in the chat, I get:

```
Error calling connector: event: error

data: {"error":{"code":"unsupported_value","message":"Received a bad request status code for request from inference entity id [openai-chat_completion-rbsv82821ae] status [400]. Error message: [Unsupported value: 'temperature' does not support 0.2 with this model. Only the default (1) value is supported.]","param":"temperature","type":"invalid_request_error"}}
```
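When comparing connectors, it helps to pull the actual OpenAI error out of the streamed event rather than reading the raw text. A small Python sketch, assuming the error always arrives as a single `data:` line in the format shown above; the function name is hypothetical.

```python
import json


def parse_sse_error(raw: str) -> dict:
    """Return the "error" object from a server-sent `event: error` block.

    Assumes one `data: {...}` line carrying JSON, as in the output above.
    """
    for line in raw.splitlines():
        if line.startswith("data:"):
            payload = json.loads(line[len("data:"):].strip())
            return payload["error"]
    raise ValueError("no data line found in event")


# The event exactly as returned by the AI Assistant
raw_event = '''event: error

data: {"error":{"code":"unsupported_value","message":"Received a bad request status code for request from inference entity id [openai-chat_completion-rbsv82821ae] status [400]. Error message: [Unsupported value: 'temperature' does not support 0.2 with this model. Only the default (1) value is supported.]","param":"temperature","type":"invalid_request_error"}}'''

err = parse_sse_error(raw_event)
print(err["param"], err["code"])
```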

When I played around with the Python openai module some time ago, I had already found out that gpt-5 indeed no longer supports setting temperature (only the default value of 1, as the error says). AFAIK it only supports “verbosity” and “reasoning_effort” instead.
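This is roughly the kind of model-aware handling I would expect on the connector side: drop temperature for gpt-5 models instead of sending 0.2. A minimal Python sketch, not how Kibana actually builds its request; the prefix list and function name are hypothetical, and only gpt-5 is listed because that is the model I saw fail.

```python
# Hypothetical list: model prefixes that (per the 400 error above)
# only accept the default temperature
FIXED_TEMPERATURE_PREFIXES = ("gpt-5",)


def build_chat_payload(model, messages, temperature=None, reasoning_effort=None):
    """Build a chat completion body, skipping parameters the model rejects."""
    payload = {"model": model, "messages": messages}
    fixed = model.startswith(FIXED_TEMPERATURE_PREFIXES)
    if temperature is not None and not fixed:
        # Older models such as gpt-4.1 still accept a custom temperature
        payload["temperature"] = temperature
    if reasoning_effort is not None and fixed:
        # Assumption: gpt-5 takes reasoning_effort instead of temperature
        payload["reasoning_effort"] = reasoning_effort
    return payload


msgs = [{"role": "user", "content": "Hello World"}]
gpt5 = build_chat_payload("gpt-5-2025-08-07", msgs, temperature=0.2, reasoning_effort="low")
gpt41 = build_chat_payload("gpt-4.1", msgs, temperature=0.2)
```

Matching on a prefix keeps dated snapshots such as gpt-5-2025-08-07 covered without listing each one.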

So does this mean gpt-5 is not yet supported? IMHO it would be nice if the Model ID field were a dropdown that only allows selecting supported models, or at least if the supported models were listed in the documentation.

EDIT: I made a gpt-4.1 connector with the same restricted API key, which does seem to work fine. I created a new System Prompt. Then I clicked the “Setup Knowledge Base” button, which did nothing: it went greyed out and keeps spinning “forever”.

Any feedback on the above questions is very welcome.

Willem