I have managed to set up both Elasticsearch and Kibana with self-signed certificates by following these guides:

Different CA | Elastic Docs

elasticsearch-certutil | Reference

With Elasticsearch and Kibana up and running, I then created a new certificate for Fleet Server. I transferred the Fleet Server certificate and the CA certificate to the Fleet Server VM, then installed and enrolled Fleet Server with these commands:

curl -L -O https://artifacts.elastic.co/downloads/beats/elastic-agent/elastic-agent-9.0.3-linux-x86_64.tar.gz

tar xzvf elastic-agent-9.0.3-linux-x86_64.tar.gz

cd elastic-agent-9.0.3-linux-x86_64

sudo ./elastic-agent install --url=https://34.55.72.62:8220 \
  --fleet-server-es=https://34.172.16.38:9200 \
  --fleet-server-service-token=AAEAAWVsYXN0aWMvZmxlZXQtc2VydmVyL3Rva2VuLTE3NTI4NjM3ODIwMDQ6YkVLak9oNEVSZkdYVlBWbFlKMnoyQQ \
  --fleet-server-policy=fleet-server-policy \
  --certificate-authorities=/etc/myfleet/certs/ca/ca.crt \
  --fleet-server-es-ca=/etc/myfleet/certs/ca/ca.crt \
  --fleet-server-cert=/etc/myfleet/certs/fleet/fleet.crt \
  --fleet-server-cert-key=/etc/myfleet/certs/fleet/fleet.key \
  --fleet-server-port=8220 \
  --install-servers \
  --insecure

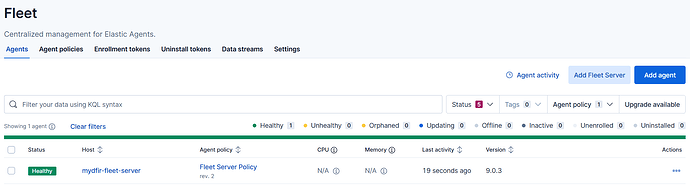

Fleet Server is now successfully enrolled, and the agent on the Fleet Server host is also reported as healthy.

However, no data is being sent to Elasticsearch. The log of the agent on the Fleet Server host shows these messages:

{"log.level":"error","@timestamp":"2025-07-19T07:36:09.064Z","message":"Failed to connect to backoff(elasticsearch(https://34.172.16.38:9200)): Get \"https://34.172.16.38:9200\": x509: certificate signed by unknown authority","component":{"binary":"metricbeat","dataset":"elastic_agent.metricbeat","id":"http/metrics-monitoring","type":"http/metrics"},"log":{"source":"http/metrics-monitoring"},"ecs.version":"1.6.0","log.logger":"publisher_pipeline_output","log.origin":{"file.line":149,"file.name":"pipeline/client_worker.go","function":"github.com/elastic/beats/v7/libbeat/publisher/pipeline.(*netClientWorker).run"},"service.name":"metricbeat","ecs.version":"1.6.0"}

{"log.level":"info","@timestamp":"2025-07-19T07:36:09.064Z","message":"Attempting to reconnect to backoff(elasticsearch(https://34.172.16.38:9200)) with 894 reconnect attempt(s)","component":{"binary":"metricbeat","dataset":"elastic_agent.metricbeat","id":"http/metrics-monitoring","type":"http/metrics"},"log":{"source":"http/metrics-monitoring"},"service.name":"metricbeat","ecs.version":"1.6.0","log.logger":"publisher_pipeline_output","log.origin":{"file.line":140,"file.name":"pipeline/client_worker.go","function":"github.com/elastic/beats/v7/libbeat/publisher/pipeline.(*netClientWorker).run"},"ecs.version":"1.6.0"}
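To rule out a mismatch between `ca.crt` and the certificate that Elasticsearch actually serves, a check along the following lines could be run on the Fleet Server VM. This is only a sketch: `verify_chain` is a hypothetical helper name (not part of elastic-agent), `/tmp/es.crt` is an assumed scratch path, and it assumes the Elasticsearch certificate is signed directly by the CA, with no intermediate.

```shell
# Hypothetical helper (not part of elastic-agent): prints OK if cert_file
# validates against ca_file according to OpenSSL, FAIL otherwise.
verify_chain() {
  ca_file="$1"
  cert_file="$2"
  if openssl verify -CAfile "$ca_file" "$cert_file" >/dev/null 2>&1; then
    echo "OK"
  else
    echo "FAIL"
  fi
}

# Example usage (commented out; needs network access to Elasticsearch).
# /tmp/es.crt is an assumed scratch location for the served certificate.
#   openssl s_client -connect 34.172.16.38:9200 -showcerts </dev/null 2>/dev/null \
#     | openssl x509 -outform PEM > /tmp/es.crt
#   verify_chain /etc/myfleet/certs/ca/ca.crt /tmp/es.crt
```

A `FAIL` here would suggest that the CA file on the VM is not the one that signed the Elasticsearch HTTP certificate; an `OK` would point instead at how the CA is being passed to the monitoring components.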

Why does it still say "certificate signed by unknown authority", even though I included the --insecure flag in the agent installation?