Hi All,

I recently started exploring the ELK stack and have been happy learning it and applying its features to my requirements. Now I need help retrieving the documents (records) returned by a saved search.

I am pushing my jenkins pipeline jobs console logs to elasticsearch using jenkins-logstash plugin and it's pumping the data without any problem. I am filtering my jenkins console data using a field called "ISSUE_ID".I created a saved search with the output fields being

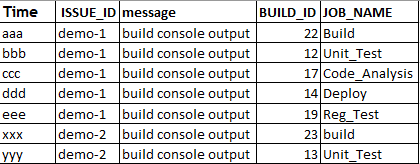

"time" "ISSUE_ID" "message" "BUILD_ID" "JOB_NAME". In kibana, I can see that my saved search is returning around 13 records for last 24 hrs. I used the content available in "Request" tab to retrieve the same no of documents(records) inside my ubuntu host using the below curl command. But it's dumping a huge amount of data.

How can I retrieve the records with only the fields listed in the saved search? I expect to get the same ~13 records, each containing just those five fields.

The curl command is:

```shell
curl -X GET 'http://localhost:9200/logstash-*/_search' -d @request.json
```
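For reference, here is a minimal Python sketch (standard library only) of the same call. It assumes Elasticsearch is reachable at `http://localhost:9200` as in the curl command, and it adds the `filter_path` URL parameter so that only `hits.hits._source` is returned instead of the whole response (highlights, aggregation buckets, shard info). The helper names `search_url`, `run_search`, `rows_from_response` and `fetch_rows` are hypothetical.

```python
import json
import urllib.parse
import urllib.request


def search_url(base_url, index, filter_path=None):
    """Build the _search URL; filter_path (if given) trims the JSON reply."""
    url = f"{base_url}/{index}/_search"
    if filter_path:
        url += "?" + urllib.parse.urlencode({"filter_path": filter_path})
    return url


def run_search(url, body):
    """Send the body like `curl -d @request.json` and return the parsed reply.

    POST is used instead of GET because not every HTTP client sends a body
    with GET; Elasticsearch accepts both methods for _search.
    """
    req = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req) as resp:
        return json.load(resp)


def rows_from_response(response):
    """Return one dict per hit holding only that hit's _source."""
    # With filter_path, a search with no hits may come back as {}, so use .get().
    hits = response.get("hits", {}).get("hits", [])
    return [hit.get("_source", {}) for hit in hits]


def fetch_rows(request_file, base_url="http://localhost:9200", index="logstash-*"):
    """Hypothetical helper: run the saved request file and return the documents."""
    with open(request_file) as f:
        body = json.load(f)
    url = search_url(base_url, index, filter_path="hits.hits._source")
    return rows_from_response(run_search(url, body))
```

Usage would be along the lines of `for row in fetch_rows("request.json"): print(row)`. Note that `filter_path` only trims the response envelope; each `_source` still contains every field of the document unless the request body itself restricts it.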

The content of request.json (the request Kibana sent to Elasticsearch for the saved search) is:

```json
{
  "highlight": {
    "pre_tags": ["@kibana-highlighted-field@"],
    "post_tags": ["@/kibana-highlighted-field@"],
    "fields": {
      "*": {}
    },
    "require_field_match": false,
    "fragment_size": 2147483647
  },
  "query": {
    "filtered": {
      "query": {
        "query_string": {
          "analyze_wildcard": true,
          "query": "ISSUE_ID"
        }
      },
      "filter": {
        "bool": {
          "must": [
            {
              "range": {
                "@timestamp": {
                  "gte": 1518515132440,
                  "lte": 1518601532440,
                  "format": "epoch_millis"
                }
              }
            }
          ],
          "must_not": []
        }
      }
    }
  },
  "size": 500,
  "sort": [
    {
      "@timestamp": {
        "order": "desc",
        "unmapped_type": "boolean"
      }
    }
  ],
  "aggs": {
    "2": {
      "date_histogram": {
        "field": "@timestamp",
        "interval": "30m",
        "time_zone": "Asia/Kolkata",
        "min_doc_count": 0,
        "extended_bounds": {
          "min": 1518515132440,
          "max": 1518601532440
        }
      }
    }
  },
  "fields": ["*", "_source"],
  "script_fields": {},
  "fielddata_fields": ["@timestamp", "@buildTimestamp", "post_date"]
}
```
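One way to get only the saved-search columns is to drop the parts of this request that only serve Kibana's Discover view (highlighting, the date-histogram aggregation, `fields`, `script_fields`, `fielddata_fields`) and add `_source` filtering, which limits each hit's `_source` to the listed fields. Below is a minimal Python sketch of that transformation, keeping the query, size and sort unchanged. Assumptions: the column names are taken verbatim from the saved search, Kibana's "time" column is assumed to correspond to `@timestamp` (the field the range filter uses), and `slim_request` is a hypothetical helper name.

```python
import copy

# Columns of the saved search; "time" is assumed to be the @timestamp field.
SAVED_SEARCH_COLUMNS = ["@timestamp", "ISSUE_ID", "message", "BUILD_ID", "JOB_NAME"]

# Top-level keys of the Kibana request that only feed the Discover UI.
KIBANA_ONLY_KEYS = ("highlight", "aggs", "fields", "script_fields", "fielddata_fields")


def slim_request(kibana_request, columns=SAVED_SEARCH_COLUMNS):
    """Return a copy of the Kibana request that asks only for `columns`."""
    body = copy.deepcopy(kibana_request)  # leave the caller's dict untouched
    for key in KIBANA_ONLY_KEYS:
        body.pop(key, None)
    # _source filtering: each hit's _source will contain only these fields.
    body["_source"] = list(columns)
    return body
```

The result can be written back to a file with `json.dump` and sent with the same curl command. Adding `?filter_path=hits.hits._source` to the URL should further strip the reply down to little more than those selected fields for each matching document.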