Hello Elastic community,

Any idea why Kibana tries to connect to 169.254.169.254:80 for the first 5-6 minutes after I start it?

I noticed this with version 7.17.9, but I think it has been like this for at least all 7.17.x versions.

Hi @nisow95612. I'm not aware of Kibana trying to connect to this address. But I see that the add_cloud_metadata Beats processor can reach out to this address.

See also these links.

Yes, this IP address seems to be a "cloud metadata URL" or something.

But I am not aware of any configuration that would make Kibana need it.

It is a very standard Kibana server, installed from the official repository:

deb https://artifacts.elastic.co/packages/7.x/apt stable main

There are no plugins installed:

# su kibana -s /bin/sh -c "bin/kibana-plugin list"

No plugins installed.

But whenever I systemctl restart elasticsearch, these connections show up.

Also, wouldn't the Beats processor run on Elasticsearch ingest nodes?

When I restart the Elasticsearch nodes, they don't try to connect to 169.254.169.254.

Ah, I was wrong. Kibana does use this endpoint for telemetry collection. I believe that if you opt out of telemetry (i.e. set telemetry.optIn: false in your "kibana.yml"), these outbound calls would stop.

Well, thank you for the answer.

I was hoping that telemetry.sendUsageFrom: "browser" would be enough, but it isn't.

I tried disabling telemetry in Kibana advanced settings, but it wasn't enough.

I tried setting telemetry.optIn: false, but it wasn't enough.

I found that telemetry.optIn and telemetry.allowChangingOptInStatus cannot both be false at the same time; Kibana refuses to start with a misleading error:

FATAL Error: [config validation of [telemetry].optIn]: expected value to equal [true]
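To catch that combination before restarting Kibana, a small pre-flight check can read kibana.yml. This is a minimal sketch in POSIX sh, assuming the settings are written as flat "telemetry.<key>: <value>" lines; nested YAML blocks are not understood, and telemetry_setting and check_telemetry_conflict are hypothetical helper names, not part of Kibana.

```shell
#!/bin/sh
# Hypothetical pre-flight check for the telemetry settings discussed above.
# Assumption: kibana.yml uses flat "telemetry.<key>: <value>" lines.

# Print the first value of telemetry.<key> in file $1 (key in $2), or nothing.
# Commented-out lines ("# telemetry...") do not match; quotes are stripped.
telemetry_setting() {
  sed -n "s/^[[:space:]]*telemetry\.$2:[[:space:]]*\([^#[:space:]]*\).*/\1/p" "$1" |
    head -n 1 | tr -d "\"'"
}

# Return 1 (and warn on stderr) if both settings are false, the combination
# that produced "expected value to equal [true]" in the error quoted above.
check_telemetry_conflict() {
  opt_in=$(telemetry_setting "$1" optIn)
  allow=$(telemetry_setting "$1" allowChangingOptInStatus)
  if [ "$opt_in" = false ] && [ "$allow" = false ]; then
    echo "telemetry.optIn and telemetry.allowChangingOptInStatus are both false" >&2
    return 1
  fi
  return 0
}
```

For example, check_telemetry_conflict /etc/kibana/kibana.yml (the config location used by the deb package) returns non-zero for the combination that stopped Kibana from starting.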

I was worried it was a licensed feature, but it turns out that the telemetry settings for 7.17 are just different.

So I tried setting the 7.x-only telemetry.enabled: false, but it still has no effect.

PS: I am running the free Basic tier. I pushed for getting the Gold subscription, but it got discontinued.

Oh, I didn't notice this line before. This is happening when you start Elasticsearch, not Kibana? If so, we should maybe move this to the Elasticsearch Discuss forum for a more appropriate audience?

Thank you for spotting it. Unfortunately, it is a typo on my side: I was already thinking about the next paragraph, where I mention that this does not happen when I restart Elasticsearch.

To be clear:

When I systemctl restart kibana on the Kibana server, the Kibana server generates this traffic.

When I systemctl restart elasticsearch on the Elasticsearch servers, there is no such traffic.

When I systemctl restart elasticsearch on the Kibana server, it gives a "unit not found" error.

Hmm, I'm not able to recreate this. Where are you seeing these requests being made? In the log file?

Ultimately, if you're not running Kibana on a cloud service VM such as GCP, AWS, or Azure, these requests go to a link-local address, which means they are unlikely to reach any external network.

Yes, I would agree that a request to a link-local address should not reach the internet. Unfortunately, the manufacturers of all the routers along the way turned out to disagree, and that is exactly what happens: I am seeing these requests on my internet uplink.
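If the probes must not leave the host at all, one option is a local firewall rule. A minimal sketch, assuming iptables is in use and that nothing on this (non-cloud) host legitimately needs the metadata service: rejecting with a TCP reset should make the local connection attempt fail quickly instead of waiting in SYN_SENT. The run wrapper and the APPLY variable are hypothetical conveniences: commands are only printed unless APPLY=1 is set, and the rule is not persisted across reboots.

```shell
#!/bin/sh
# Sketch: stop outbound TCP to the cloud metadata address on this host.
# Dry-run by default: commands are printed unless APPLY=1 is set.
METADATA_IP=169.254.169.254

run() {
  if [ "${APPLY:-0}" = 1 ]; then
    "$@"
  else
    echo "$*"
  fi
}

# Reject with a TCP reset so the local connect() should fail fast
# instead of lingering in SYN_SENT the way silently dropped packets do.
block_metadata() {
  run iptables -A OUTPUT -d "$METADATA_IP" -p tcp -j REJECT --reject-with tcp-reset
}

# Remove the same rule again (-D takes the identical rule specification).
unblock_metadata() {
  run iptables -D OUTPUT -d "$METADATA_IP" -p tcp -j REJECT --reject-with tcp-reset
}
```

Calling block_metadata on its own only prints the iptables command for review; running it as root with APPLY=1 actually adds the rule.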

Since you repeatedly mention the cloud, could it be relevant that my Kibana server is a Linux KVM VM?

I confirmed that these connection attempts come from the Node.js (Kibana) process:

# netstat -Wapnt | grep 169

tcp 0 1 10.x.y.z:45802 169.254.169.254:80 SYN_SENT 32440/node
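The same check can be scripted so that the owning process's full command line is printed, which shows whether that node process really is Kibana's. A sketch assuming the seven-column netstat -Wapnt layout shown above (foreign address in column 5, PID/Program in column 7); metadata_pids and pid_cmdline are illustrative helper names.

```shell
#!/bin/sh
# Sketch: read `netstat -Wapnt` output on stdin and print the PID/Program
# column for every socket whose foreign address is 169.254.169.254.
# Assumed columns: Proto Recv-Q Send-Q Local Foreign State PID/Program
metadata_pids() {
  awk '$5 ~ /^169\.254\.169\.254:/ { print $7 }'
}

# Print the full command line of a PID, e.g. to tell Kibana's node apart
# from any other Node.js process on the host.
pid_cmdline() {
  ps -o args= -p "$1"
}
```

Usage: netstat -Wapnt | metadata_pids, then pid_cmdline with the number before the slash (32440 in the output above).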

I can also see this in my local installation, and I am wondering why it is needed.

I guess a "good" tool for security or information-security investigations should not create such traffic by itself?

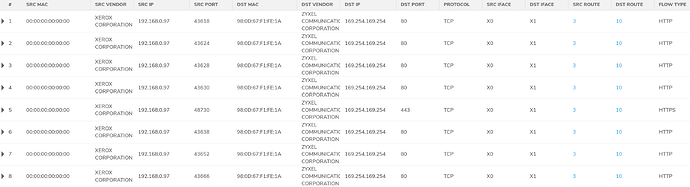

I see more than 5,000 requests in 6 hours:

"data_stream.dataset": ["sonicwall_firewall.log"],
"data_stream.type": ["logs"],
"destination.ip": ["169.254.169.254"],
"destination.mac": ["98-0D-67-F1-FE-1A"],
"destination.nat.ip": ["169.254.169.254"],
"destination.nat.port": [443],
"destination.port": [443],
"event.code": ["537"],
"event.dataset": ["sonicwall_firewall.log"],
"network.bytes": [120],
"network.packets": [2],
"network.protocol": ["https"],
"network.transport": ["tcp"],

Strange. My Kibana server generates these only when freshly restarted, then it stops.

So you are saying your Kibana server generates this all the time, and it really is the Node.js process?

But thanks for confirming it is not just me.

PS: Is your Kibana server bare metal or a VM?

Well, I have Elastic running on Kali Purple in a VM.

But most of the findings came up when I integrated my firewall into the SIEM.

Currently I need to check this information. I am still a beginner, and it's hard to get the right answer.

What I wonder about: the requests are not only coming from the Kali Purple VM but from other hosts as well.

I guess I will "fall back" to a Wireshark trace and give feedback later...

So: more info, more confusion.

The packet source is 192.168.0.97 (Kali Purple).

Besides the fact that this should not be routed, I can see this IP on both sides of my firewall.

This is what I found in other sources:

Special-Use Addresses

"Autoconfiguration" IP Addresses:

169.254.0.0 - 169.254.255.255

Addresses in the range 169.254.0.0 to 169.254.255.255 are used automatically by most network devices when they are configured to use IP, do not have a static IP Address assigned and are unable to obtain an IP address using DHCP.

This traffic is intended to be confined to the local network, so the administrator of the local network should look for misconfigured hosts. Some ISPs inadvertently also permit this traffic, so you may also want to contact your ISP. This is documented in RFC 6890.
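Whether an address belongs to that block is a simple prefix match, which is what lets a firewall flag this traffic. A sketch in POSIX sh with awk, for dotted-quad IPv4 input only (no validation beyond the octet count); in_cidr and is_link_local are illustrative names.

```shell
#!/bin/sh
# Sketch: succeed if IPv4 address $1 lies inside CIDR block $2 (e.g. 10.0.0.0/8).
# Both addresses are turned into 32-bit integers; dividing by 2^(32-prefix)
# and truncating drops the host bits, so equal quotients mean same network.
in_cidr() {
  awk -v ip="$1" -v cidr="$2" 'BEGIN {
    split(cidr, c, "/")
    host_bits = 32 - c[2]
    if (split(ip, a, ".") != 4 || split(c[1], b, ".") != 4) exit 2
    x = ((a[1] * 256 + a[2]) * 256 + a[3]) * 256 + a[4]
    y = ((b[1] * 256 + b[2]) * 256 + b[3]) * 256 + b[4]
    if (int(x / 2 ^ host_bits) == int(y / 2 ^ host_bits)) exit 0
    exit 1
  }'
}

# 169.254.0.0/16 is the link-local ("autoconfiguration") block quoted above.
is_link_local() {
  in_cidr "$1" 169.254.0.0/16
}
```

With this, is_link_local 169.254.169.254 succeeds, while is_link_local 192.168.0.97 fails.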

I will now check whether I have some kind of networking issue...

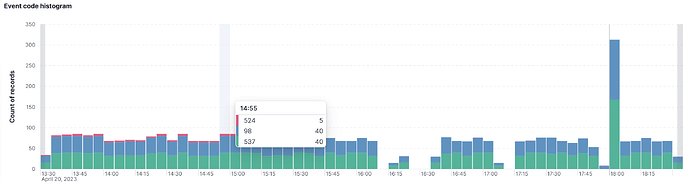

Here's my finding.

The cause of this traffic is "elastic-agent".

I had my firewall "sniffing" while I started and stopped the machine and services.

I can reproduce the traffic, and also stop it, by starting and stopping the agent service.

As far as I can see, the requests are sent at a "one-minute interval".

Strange: a session is established through the firewall, which I thought should not be possible.

The sessions appear every minute and then go away until the next interval.

Next, I will check whether this comes from an integration.

And here's the traffic for 169.254.169.254. You can see the times when I had the machine/service down for testing.

Aha. I don't have elastic-agent installed. That explains why you get more.

Can you check whether restarting the Kibana service (with elastic-agent stopped, so there is no noise) creates this connection too?

PS: You can find the source process by running lsof in a loop:

while true; do lsof -ni; done | grep -F --line-buffered 169.254.169.254

Well, I had this running in a root terminal on my Kali Purple machine. It generated no output.

But at the same time, I can see the packet generated every minute in my firewall's packet monitor.

I stopped only the Kibana service and still saw the packets in my firewall, every minute.

I restarted Kibana: still no output from the running lsof...

Then I stopped the elastic-agent service, which resulted in silence...

Still no reaction from lsof!!!

So the traffic is coming from the elastic-agent service, and stopping Kibana does not stop it.

Next, I created an "empty policy" and assigned it to my agent kali-purple.

I guess this was too much testing: I have now shredded my Fleet Server.

Well, I guess I need to repair this first...

Ah, right. Sorry, I forgot that your firewall allows these connections.

I tested this lsof approach after I had blackholed them, so I think the socket stays around longer in that case?

Anyway, to help me confirm my issue, can you please stop elastic-agent, check that you have silence, restart Kibana, wait a minute or two, check whether traffic appears, and then start elastic-agent again?

It will not help you with your problem, but it will help me confirm whether Kibana itself does it too.
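The requested test can be written down as a script so that both sides run exactly the same steps. A sketch that assumes the systemd unit names used in this thread (elastic-agent, kibana), tcpdump on Linux (-i any), and arbitrary 60 s and 120 s capture windows; nothing is executed unless APPLY=1 is set, and run and kibana_only_check are illustrative names.

```shell
#!/bin/sh
# Sketch of the isolation test: does a Kibana restart alone produce traffic
# to the metadata address? Dry-run by default; set APPLY=1 to execute (as root).
FILTER='host 169.254.169.254'

run() {
  if [ "${APPLY:-0}" = 1 ]; then
    "$@"
  else
    echo "$*"
  fi
}

kibana_only_check() {
  # 1. Remove the noise: stop the agent.
  run systemctl stop elastic-agent
  # 2. Baseline: expect no packets printed during this window.
  run timeout 60 tcpdump -nn -i any "$FILTER"
  # 3. Restart Kibana and watch for a couple of minutes.
  run systemctl restart kibana
  run timeout 120 tcpdump -nn -i any "$FILTER"
  # 4. Restore the agent.
  run systemctl start elastic-agent
}
```

Packets printed during the second capture but not the first would point at Kibana itself.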

PS: Also, sorry I forgot to warn you that running things in a loop is expensive, but I have found no better way so far.
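A possibly cheaper alternative to a busy lsof loop is to poll the kernel's socket table directly. This sketch assumes Linux, root privileges (to read other processes' descriptor links) and a little-endian CPU such as x86_64, where /proc/net/tcp prints IPv4 addresses byte-reversed in hex. It covers IPv4 TCP only, polls once per second, and can therefore still miss connections that open and close between polls. All function names are illustrative.

```shell
#!/bin/sh
# Sketch: poll /proc/net/tcp for sockets to 169.254.169.254 and name the owner.
# Linux-only, IPv4 TCP only; run as root to see every process's descriptors.

# Dotted quad -> hex as printed in /proc/net/tcp on a little-endian host,
# e.g. 127.0.0.1 -> 0100007F, 169.254.169.254 -> FEA9FEA9.
ip_to_proc_hex() (
  IFS=.
  set -- $1
  printf '%02X%02X%02X%02X\n' "$4" "$3" "$2" "$1"
)

# Print "<state> <inode>" for rows of a /proc/net/tcp-format file ($1) whose
# remote address (column 3) is IPv4 address $2. State 02 means SYN_SENT.
metadata_sockets() {
  awk -v rem="$(ip_to_proc_hex "$2"):" \
    'NR > 1 && index($3, rem) == 1 { print $4, $10 }' "$1"
}

# Print the PID that holds socket inode $1, by scanning /proc/<pid>/fd links.
pid_for_inode() {
  for fd in /proc/[0-9]*/fd/*; do
    if [ "$(readlink "$fd" 2>/dev/null)" = "socket:[$1]" ]; then
      pid=${fd#/proc/}
      echo "${pid%%/*}"
      return 0
    fi
  done
  return 1
}

# Poll once per second; the fd scan only runs when a matching socket exists.
watch_metadata() {
  while :; do
    metadata_sockets /proc/net/tcp 169.254.169.254 |
      while read -r state inode; do
        [ "$inode" = 0 ] && continue
        echo "$(date +%T) state=$state inode=$inode pid=$(pid_for_inode "$inode")"
      done
    sleep 1
  done
}
```

Calling watch_metadata as root prints one line per matching socket; the PID can then be looked up with ps.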

No problem, this is an "OnPrem" lab environment, so I guess there is no "cost problem".

From my previous testing, I am quite sure the traffic is generated by the agent, not by Kibana. I even think it is either the Fleet Server or my SonicWall integration, which also runs on this agent.

As soon as the agent starts, and for as long as it runs, I get this traffic. Stopping other services such as "elasticsearch", "kibana" or "enterprise-search" does not affect the issue.

I redeployed my Fleet agent, and now the communication seems to run as before.

I need to wait until everything is stable before I can continue testing.

After some more testing i found the following:

The "1-minute" web communication appears to be synchronized with these agent alerts in Kibana:

"@timestamp","agent.name",message

"Apr 23, 2023 @ 09:38:00.468","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:216 +0xa70"

"Apr 23, 2023 @ 09:38:00.467","kali-purple","created by github.com/elastic/beats/v7/filebeat/input/udp.newInputMetrics"

"Apr 23, 2023 @ 09:38:00.466","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:242 +0xd1"

"Apr 23, 2023 @ 09:38:00.465","kali-purple","panic: runtime error: index out of range [1] with length 0"

"Apr 23, 2023 @ 09:38:00.465","kali-purple","goroutine 173 [running]:"

"Apr 23, 2023 @ 09:38:00.465","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp.procNetUDP({0xc00032cc80?, 0x1, 0x1})"

"Apr 23, 2023 @ 09:38:00.465","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:271 +0x552"

"Apr 23, 2023 @ 09:38:00.465","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp.(*inputMetrics).poll(0xc000d9d200, {0xc00032cc80, 0x1, 0x1}, 0x776f6e6b20656220?, 0xc00032c640)"

"Apr 23, 2023 @ 09:36:58.634","kali-purple","created by github.com/elastic/beats/v7/filebeat/input/udp.newInputMetrics"

"Apr 23, 2023 @ 09:36:58.634","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:216 +0xa70"

"Apr 23, 2023 @ 09:36:58.633","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:271 +0x552"

"Apr 23, 2023 @ 09:36:58.633","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp.(*inputMetrics).poll(0xc001581c80, {0xc0002871f0, 0x1, 0x1}, 0x2020202020202020?, 0xc000286d30)"

"Apr 23, 2023 @ 09:36:58.633","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:242 +0xd1"

"Apr 23, 2023 @ 09:36:58.632","kali-purple","panic: runtime error: index out of range [1] with length 0"

"Apr 23, 2023 @ 09:36:58.632","kali-purple","goroutine 258 [running]:"

"Apr 23, 2023 @ 09:36:58.632","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp.procNetUDP({0xc0002871f0?, 0x1, 0x1})"

"Apr 23, 2023 @ 09:35:54.033","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:242 +0xd1"

"Apr 23, 2023 @ 09:35:54.033","kali-purple","created by github.com/elastic/beats/v7/filebeat/input/udp.newInputMetrics"

"Apr 23, 2023 @ 09:35:54.033","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:216 +0xa70"

"Apr 23, 2023 @ 09:35:54.032","kali-purple","panic: runtime error: index out of range [1] with length 0"

"Apr 23, 2023 @ 09:35:54.032","kali-purple","goroutine 185 [running]:"

"Apr 23, 2023 @ 09:35:54.032","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp.procNetUDP({0xc0012df440?, 0x1, 0x1})"

"Apr 23, 2023 @ 09:35:54.032","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:271 +0x552"

"Apr 23, 2023 @ 09:35:54.032","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp.(*inputMetrics).poll(0xc0011e2900, {0xc0012df440, 0x1, 0x1}, 0xc0005a5b80?, 0xc0012df1d0)"

"Apr 23, 2023 @ 09:34:49.391","kali-purple","panic: runtime error: index out of range [1] with length 0"

"Apr 23, 2023 @ 09:34:49.391","kali-purple","goroutine 186 [running]:"

"Apr 23, 2023 @ 09:34:49.391","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp.procNetUDP({0xc000ae1890?, 0x1, 0x1})"

"Apr 23, 2023 @ 09:34:49.391","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:271 +0x552"

"Apr 23, 2023 @ 09:34:49.391","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp.(*inputMetrics).poll(0xc0003d4c00, {0xc000ae1890, 0x1, 0x1}, 0x0?, 0xc000ae1620)"

"Apr 23, 2023 @ 09:34:49.391","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:242 +0xd1"

"Apr 23, 2023 @ 09:34:49.391","kali-purple","created by github.com/elastic/beats/v7/filebeat/input/udp.newInputMetrics"

"Apr 23, 2023 @ 09:34:49.391","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:216 +0xa70"

"Apr 23, 2023 @ 09:33:44.735","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:242 +0xd1"

"Apr 23, 2023 @ 09:33:44.735","kali-purple","created by github.com/elastic/beats/v7/filebeat/input/udp.newInputMetrics"

"Apr 23, 2023 @ 09:33:44.735","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:216 +0xa70"

"Apr 23, 2023 @ 09:33:44.734","kali-purple","panic: runtime error: index out of range [1] with length 0"

"Apr 23, 2023 @ 09:33:44.734","kali-purple","goroutine 79 [running]:"

"Apr 23, 2023 @ 09:33:44.734","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp.procNetUDP({0xc000fe3a00?, 0x1, 0x1})"

"Apr 23, 2023 @ 09:33:44.734","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:271 +0x552"

"Apr 23, 2023 @ 09:33:44.734","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp.(*inputMetrics).poll(0xc000f17680, {0xc000fe3a00, 0x1, 0x1}, 0x6b203a6570797420?, 0xc000fe3790)"

"Apr 23, 2023 @ 09:32:40.118","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:271 +0x552"

"Apr 23, 2023 @ 09:32:40.118","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp.(*inputMetrics).poll(0xc00056f400, {0xc0003e7600, 0x1, 0x1}, 0xc0004ffe40?, 0xc0003e7240)"

"Apr 23, 2023 @ 09:32:40.118","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:242 +0xd1"

"Apr 23, 2023 @ 09:32:40.118","kali-purple","created by github.com/elastic/beats/v7/filebeat/input/udp.newInputMetrics"

"Apr 23, 2023 @ 09:32:40.118","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:216 +0xa70"

"Apr 23, 2023 @ 09:32:40.117","kali-purple","panic: runtime error: index out of range [1] with length 0"

"Apr 23, 2023 @ 09:32:40.117","kali-purple","goroutine 233 [running]:"

"Apr 23, 2023 @ 09:32:40.117","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp.procNetUDP({0xc0003e7600?, 0x1, 0x1})"

"Apr 23, 2023 @ 09:31:35.460","kali-purple","goroutine 175 [running]:"

"Apr 23, 2023 @ 09:31:35.460","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp.procNetUDP({0xc0007d12b0?, 0x1, 0x1})"

"Apr 23, 2023 @ 09:31:35.460","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:271 +0x552"

"Apr 23, 2023 @ 09:31:35.460","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp.(*inputMetrics).poll(0xc0007e2180, {0xc0007d12b0, 0x1, 0x1}, 0xa64726f7779656b?, 0xc0007d1040)"

"Apr 23, 2023 @ 09:31:35.460","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:242 +0xd1"

"Apr 23, 2023 @ 09:31:35.460","kali-purple","created by github.com/elastic/beats/v7/filebeat/input/udp.newInputMetrics"

"Apr 23, 2023 @ 09:31:35.460","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:216 +0xa70"

"Apr 23, 2023 @ 09:31:35.459","kali-purple","panic: runtime error: index out of range [1] with length 0"

"Apr 23, 2023 @ 09:30:30.649","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:216 +0xa70"

"Apr 23, 2023 @ 09:30:30.648","kali-purple","panic: runtime error: index out of range [1] with length 0"

"Apr 23, 2023 @ 09:30:30.648","kali-purple","goroutine 193 [running]:"

"Apr 23, 2023 @ 09:30:30.648","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp.procNetUDP({0xc001478ad0?, 0x1, 0x1})"

"Apr 23, 2023 @ 09:30:30.648","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:271 +0x552"

"Apr 23, 2023 @ 09:30:30.648","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp.(*inputMetrics).poll(0xc00050f400, {0xc001478ad0, 0x1, 0x1}, 0xc0002133e0?, 0xc001478860)"

"Apr 23, 2023 @ 09:30:30.648","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:242 +0xd1"

"Apr 23, 2023 @ 09:30:30.648","kali-purple","created by github.com/elastic/beats/v7/filebeat/input/udp.newInputMetrics"

"Apr 23, 2023 @ 09:29:25.874","kali-purple","github.com/elastic/beats/v7/filebeat/input/udp/input.go:216 +0xa70"

I investigated further and found this on GitHub.

It seems there's a known problem, so before going on I will wait for the 8.7.1 fix to come out.
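The panic timestamps in the agent log above can be checked against the "one-minute" traffic interval. A sketch that takes HH:MM:SS values (oldest first, all on the same day, fractional seconds dropped) and prints the gap in seconds between consecutive ones; intervals is an illustrative name.

```shell
#!/bin/sh
# Sketch: print the gap in seconds between consecutive HH:MM:SS arguments.
# Assumes the times are in ascending order and do not cross midnight.
intervals() {
  printf '%s\n' "$@" |
    awk -F: '{ t = $1 * 3600 + $2 * 60 + $3; if (NR > 1) print t - prev; prev = t }'
}
```

Fed the whole-second times of the eight "panic:" lines, intervals 09:30:30 09:31:35 09:32:40 09:33:44 09:34:49 09:35:54 09:36:58 09:38:00 prints 65, 65, 64, 65, 65, 64 and 62, one per line: close to the interval seen on the firewall. This is only a timing correlation; by itself it does not show which component sends the requests.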