Hi, I have a folder with multiple files called disco_[date]. When I run the command bin/logstash -f /etc/logstash/conf.d/disco.conf with only the stdout output enabled, the dates are parsed correctly and show up in the timestamp:

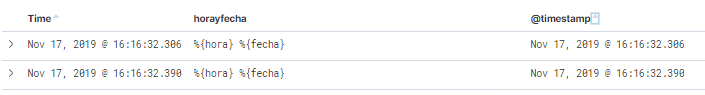

But when I try to index them in Elasticsearch, the events show up empty in Kibana, and the timestamp is the date and time at which I ran the command.

Any ideas why this is happening?

It is also not removing the horayfecha field.

UPDATE: I just realized that only the index for the current day has the problem. I re-tested the grok pattern and it works fine with the logs, but in Kibana the timestamp is corrupt. I don't know what is happening!

input {
  file {
    path => "/opt/logs/disco*"
    # path => "/opt/logs/disco_2019_11_16.log"
    start_position => "beginning"
    sincedb_path => "/dev/null"
  }
}

filter {
  grok {
    match => { "message" => "%{TIME:hora}\s%{DATA:fecha}\s%{DATA:status}\s%{DATA:server} (FileSystems%{DATA:FS}Available=%{NUMBER:Available}|FileSy$
  }
  mutate {
    add_field => {
      "origen" => "alpha"
      "horayfecha" => "%{hora} %{fecha}"
    }
    convert => {
      "Available" => "float"
      "pctje" => "integer"
    }
  }
  date {
    match => ["horayfecha", "HH:mm:ss MM/dd/YYYY" ]
    target => "@timestamp"
    remove_field => ["horayfecha"]
  }
}

output {
  elasticsearch {
    hosts => ["foo:9200"]
    index => "discos-%{+YYYY.MM.dd}"
  }
  # stdout { codec => rubydebug }
}
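
In case it helps narrow this down, here is one thing that could be checked. It is only a sketch, not a confirmed diagnosis. When the date filter cannot parse its source field, it leaves @timestamp at the ingestion time and does not apply remove_field, because that option only runs when the filter succeeds. Such events would therefore land in the current day's index with horayfecha still present, which matches the symptoms above. The output block below assumes the default failure tags (_grokparsefailure and _dateparsefailure) have not been overridden. It prints the failing events to stdout instead of indexing them:

```
output {
  # Assumption: grok and date still use their default tag_on_failure values.
  if "_grokparsefailure" in [tags] or "_dateparsefailure" in [tags] {
    # Events that failed parsing keep the ingestion-time @timestamp,
    # so print them for inspection instead of indexing them.
    stdout { codec => rubydebug }
  } else {
    elasticsearch {
      hosts => ["foo:9200"]
      index => "discos-%{+YYYY.MM.dd}"
    }
  }
}
```

If any events appear on stdout with this output, their message field should show which log lines the grok pattern or the date pattern does not cover.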